What's the frame OpenCV uses?

I have two cameras, monocular and stereo. I calibrated both and they're mounted at a fix robot arm.

However, I cannot backproject. I take a world point XYZ, and an image from each camera, when I do the backprojection, I get nonsensical coordinates, which means the frames are not matching.

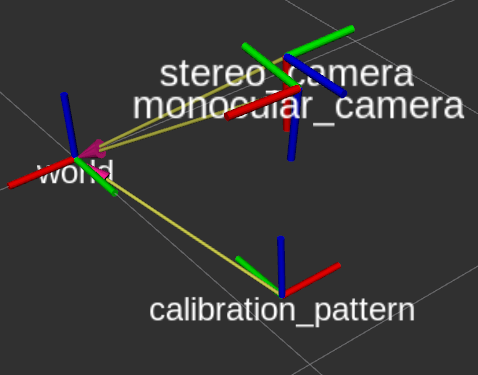

I wanted to break it down one by one, so I started with my stereo_camera, which uses X axis facing downwards, on the contrary to my monocular which uses Z to face down. I suspect having only monocular_camera_frame and stereo_camera_frame are not enough for backprojection to work. I don't know what OpenCV uses, but I feel like one has to create another frame, say, stereo_camera_image_frame which would correspond to what OpenCV thinks XYZ are. Here is how they look like on a visualiser I use (to help understanding what I am talking about):

And here is to code I use to backproject:

calibration_pattern_fworld = np.asarray([-0.27044, 0.55044, 0]) # point in real world

calibration_pattern_fmonocular = np.asarray([2648, 2132 , 0]) # same point in the image from the monocular camera

calibration_pattern_fstereo = np.asarray([437, 253 , 0]) # same point in the image from the stereo_camera

def from_image_to_world(subscribed_transforms, image_coordinates_xyz, isStereo):

if (isStereo):

# stereo camera transformations

transformed_coordinate = transform_point(image_coordinates_xyz, subscribed_transforms[SourceTargetType(source="stereo_camera", target="calibration_pattern")])

stereo_camera_model = image_geometry.StereoCameraModel()

stereo_camera_info = lazyros.basics.receive_one_message("/nerian_stereo/stereo_camera_info")

stereo_camera_model.fromCameraInfo(stereo_camera_info.left_info, stereo_camera_info.right_info)

world_coordinates_xy = stereo_camera_model.project3dToPixel(transformed_coordinate)

else:

# basler camera transformations

transformed_coordinate = transform_point(image_coordinates_xyz, subscribed_transforms[SourceTargetType(source="monocular_camera", target="calibration_pattern")])

camera_model = image_geometry.PinholeCameraModel()

camera_info = lazyros.basics.receive_one_message("/pylon_camera_node/camera_info")

camera_model.fromCameraInfo(camera_info)

camera_model.tfFrame()

world_coordinates_xy = camera_model.project3dToPixel(transformed_coordinate)

return world_coordinates_xy

Ignore all ROS-dependent stuff, they're not relevant, I kept them in the code so that it looks consistent. What matters is where the camera model is assigned and the function project3dToPixel() is used. Taking a point from each image using a third-party software like GIMP, and then using the above function yields nonsensical values.

Given all this, how do we know if the camera we use has the same frame understanding with OpenCV? Because pixel output don't really match with the real measurement once backprojected, and vice versa.