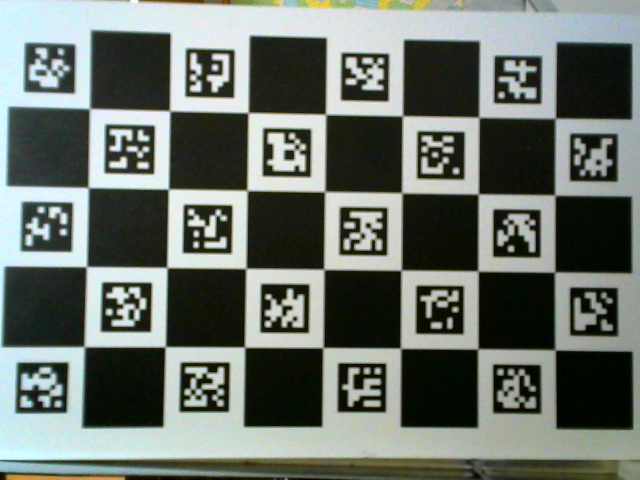

Charuco calibration

In `calibrateCameraCharuco`, only the interpolated chessboard corners are used; the ArUco marker corners are not. Why?
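As far as I can tell, `calibrateCameraCharuco` looks up each interpolated corner id in the board's table of inner chessboard corners (`board.chessboardCorners`) and calibrates on those 2D/3D pairs, so the marker corners never enter. Below is a minimal numpy sketch of that pairing. The helper names are hypothetical, and the row-by-row layout starting at `(squareLength, squareLength, 0)` is my assumption of how the board numbers its inner corners.

```python
import numpy as np


def charuco_chessboard_corners(squares_x, squares_y, square_length):
    """Inner chessboard corners of a ChArUco board, numbered row by row.

    Assumed layout: corner id k sits at ((x + 1) * L, (y + 1) * L, 0)
    with x = k % (squares_x - 1) and y = k // (squares_x - 1).
    """
    nx, ny = squares_x - 1, squares_y - 1
    ys, xs = np.mgrid[0:ny, 0:nx]  # row-major: y outer, x inner
    corners = np.stack([(xs.ravel() + 1) * square_length,
                        (ys.ravel() + 1) * square_length,
                        np.zeros(nx * ny)], axis=1)
    return corners.astype(np.float32)


def charuco_object_points(chessboard_corners, charuco_ids):
    """Pair each interpolated corner id with its 3D board position, (N, 1, 3)."""
    ids = np.asarray(charuco_ids).reshape(-1)
    return chessboard_corners[ids].reshape(-1, 1, 3)


# 8 x 5 squares of 0.03455 -> 7 x 4 = 28 inner corners
corners = charuco_chessboard_corners(8, 5, 0.03455)
obj = charuco_object_points(corners, np.array([[0], [7], [27]]))
print(corners.shape)  # (28, 3)
print(obj[:, 0, :])
```

In the real script the lookup table is simply `board.chessboardCorners`.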

If you add the ArUco marker corners to the calibration, the RMS reprojection error becomes much worse.
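To see which points inflate the RMS, one option is to compute it separately for the marker corners and for the chessboard corners after a joint calibration. Below is a numpy sketch, assuming no lens distortion (in the real script, `cv.projectPoints` with the estimated `dist` would replace `project`). `rms_per_group` and the other helpers are hypothetical. The RMS is taken as the square root of the mean squared point error, which as far as I know is how `calibrateCamera` reports it.

```python
import numpy as np


def rodrigues(rvec):
    """Axis-angle vector -> 3x3 rotation matrix (same convention as cv.Rodrigues)."""
    rvec = np.asarray(rvec, dtype=float).reshape(3)
    theta = np.linalg.norm(rvec)
    if theta < 1e-12:
        return np.eye(3)
    k = rvec / theta
    K = np.array([[0.0, -k[2], k[1]],
                  [k[2], 0.0, -k[0]],
                  [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * (K @ K)


def project(obj_pts, cam, rvec, tvec):
    """Pinhole projection of 3D board points, lens distortion ignored."""
    pc = np.asarray(obj_pts, float).reshape(-1, 3) @ rodrigues(rvec).T
    pc += np.asarray(tvec, float).reshape(3)
    xy = pc[:, :2] / pc[:, 2:3]
    return xy @ cam[:2, :2].T + cam[:2, 2]


def rms_per_group(obj_pts, img_pts, groups, cam, rvec, tvec):
    """RMS reprojection error in pixels for each label in `groups`."""
    err = project(obj_pts, cam, rvec, tvec) - np.asarray(img_pts, float).reshape(-1, 2)
    sq = np.sum(err ** 2, axis=1)
    groups = np.asarray(groups)
    return {str(g): float(np.sqrt(sq[groups == g].mean())) for g in np.unique(groups)}


# Synthetic check: 3 "marker" points shifted by 1 px, 3 exact "chessboard" points
cam = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
rvec, tvec = np.array([0.1, -0.2, 0.05]), np.array([0.0, 0.0, 0.5])
obj = np.array([[x, y, 0.0] for x in (0.0, 0.03, 0.06) for y in (0.0, 0.03)])
img = project(obj, cam, rvec, tvec)
img[:3] += [1.0, 0.0]
groups = ["marker"] * 3 + ["chessboard"] * 3
print(rms_per_group(obj, img, groups, cam, rvec, tvec))
```

With the script below, `obj`/`img` would be `pts_objets[0]`/`pts_camera[0]` (with both `extract_aruco` and `extract_coin` set to True), `groups` one label per point in the same order, and `mat_intrin`, `rvecs[0]`, `tvecs[0]` the camera matrix and pose.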

```python
import sys

import numpy as np
import cv2 as cv  # legacy cv.aruco API (opencv-contrib-python 4.6 and earlier)


def permutation_taille(pts_aruco):
    # refineDetectedMarkers may return recovered marker corners with shape
    # (4, 1, 2) instead of (1, 4, 2): bring every array back to (1, 4, 2)
    return [c.reshape((1, -1, 2)) for c in pts_aruco]


detectorParams = cv.aruco.DetectorParameters_create()
detectorParams.cornerRefinementMethod = cv.aruco.CORNER_REFINE_SUBPIX
detectorParams.cornerRefinementMinAccuracy = 0.01

NBLIGNE = 8    # squaresX
NBCOLONNE = 5  # squaresY
DICO = 14      # cv.aruco.DICT_7X7_250

dico = cv.aruco.Dictionary_get(DICO)
# squareLength = 0.03455, markerLength = 0.02164 (presumably metres)
board = cv.aruco.CharucoBoard_create(NBLIGNE, NBCOLONNE, 0.03455, 0.02164, dico)
# img = board.draw((1000, 1000), 50, 50)  # synthetic board, overwritten below
img = cv.imread("imgL.png", cv.IMREAD_GRAYSCALE)
cv.imshow('ChaRuCo', img)
cv.waitKey()

# OpenCV expects imageSize as (width, height); img.shape is (height, width)
image_size = img.shape[::-1]

pts_objets = []
pts_camera = []
frame = cv.cvtColor(img, cv.COLOR_GRAY2BGR)

pts_aruco, ids, refus = cv.aruco.detectMarkers(image=img, dictionary=dico,
                                               parameters=detectorParams)
pts_aruco, ids, refus, recover = cv.aruco.refineDetectedMarkers(
    img, board, pts_aruco, ids, rejectedCorners=refus)
if recover is not None:
    pts_aruco = permutation_taille(pts_aruco)

extract_aruco = True   # feed the ArUco marker corners to cv.calibrateCamera
extract_coin = False   # feed every 3rd interpolated chessboard corner

if ids is not None:
    retval, coin_charuco, ids_charuco = cv.aruco.interpolateCornersCharuco(
        pts_aruco, ids, img, board)
    if retval == 0:
        sys.exit("problem: no ChArUco corner interpolated")

    # Reference calibration on the interpolated chessboard corners only
    # (single view of a planar board: intrinsics are weakly constrained)
    rep_error, cam, dist, rvec, tvec = cv.aruco.calibrateCameraCharuco(
        [coin_charuco], [ids_charuco], board, image_size,
        None, None, None, None, 0)
    print("RMS = ", rep_error)
    print("Mat ", cam)
    print("Dist ", dist)
    print("Pose", rvec, tvec)

    cv.aruco.drawDetectedCornersCharuco(frame, coin_charuco, ids_charuco)
    cv.aruco.drawDetectedMarkers(frame, pts_aruco, ids)

    pts_reel = None   # 3D points on the board
    echiquier = None  # matching 2D points in the image
    if extract_aruco:
        # board.objPoints[k] = the 4 corners of marker k (k == id on a default board)
        for ir in range(len(pts_aruco)):
            if echiquier is None:
                echiquier = pts_aruco[ir][0]
                pts_reel = board.objPoints[ids[ir, 0]]
            elif board.ids[ids[ir, 0]] == ids[ir, 0]:
                echiquier = np.vstack((echiquier, pts_aruco[ir][0]))
                pts_reel = np.vstack((pts_reel, board.objPoints[ids[ir, 0]]))
    if extract_coin:
        for ir in range(0, ids_charuco.shape[0], 3):
            index = ids_charuco[ir][0]
            if echiquier is None:
                echiquier = coin_charuco[ir][0]
                pts_reel = board.chessboardCorners[index]
            else:
                echiquier = np.vstack((echiquier, coin_charuco[ir][0]))
                pts_reel = np.vstack((pts_reel, board.chessboardCorners[index]))

    # calibrateCamera expects (N, 1, 3) object points and (N, 1, 2) image points
    pts_objets.append(pts_reel.reshape((-1, 1, 3)).astype(np.float32))
    pts_camera.append(echiquier.reshape((-1, 1, 2)).astype(np.float32))
else:
    sys.exit("problem: no marker detected")

rms, mat_intrin, mat_dist, rvecs, tvecs = cv.calibrateCamera(
    pts_objets, pts_camera, image_size, None, None, None, None)
print("rms ", rms)
print("Mat ", mat_intrin)
print("dist ", mat_dist)
print("Pose", rvecs, tvecs)

cv.imshow("frame", frame)
cv.waitKey()
```
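One detail in the marker branch: `board.objPoints[i]` holds the corners of the marker whose id is `board.ids[i]`, so it is indexed by position in `board.ids`, not by marker id. With a default ChArUco board the two coincide, which is why `board.objPoints[ids[ir, 0]]` works here. Below is a numpy sketch of a lookup through the board ids that also skips dictionary ids absent from the board. `marker_object_points` is a hypothetical helper, and the toy arrays stand in for `board.ids` and `board.objPoints`.

```python
import numpy as np


def marker_object_points(board_ids, board_obj_points, detected_ids):
    """3D corners of every detected marker that belongs to the board.

    board_obj_points[i] holds the 4 corners of the marker whose id is
    board_ids[i]. Returns a (4*N, 1, 3) float32 array and the indices of
    the detected markers that were kept (to select their image corners).
    """
    position = {int(m): i for i, m in enumerate(np.asarray(board_ids).reshape(-1))}
    keep, pts = [], []
    for j, m in enumerate(np.asarray(detected_ids).reshape(-1)):
        i = position.get(int(m))
        if i is None:
            continue  # id exists in the dictionary but not on this board
        keep.append(j)
        pts.append(np.asarray(board_obj_points[i], np.float32).reshape(4, 3))
    if not pts:
        return np.empty((0, 1, 3), np.float32), keep
    return np.concatenate(pts).reshape(-1, 1, 3), keep


# Toy board with non-default ids 10, 11, 12 (unit squares 2 apart along x)
square = np.array([[0, 0, 0], [1, 0, 0], [1, 1, 0], [0, 1, 0]], np.float32)
board_ids = np.array([[10], [11], [12]])
board_obj = [square + np.array([2.0 * i, 0.0, 0.0], np.float32) for i in range(3)]
obj, keep = marker_object_points(board_ids, board_obj, np.array([[12], [99], [10]]))
print(obj.shape, keep)  # (8, 1, 3) [0, 2]
```

The matching image points would then be `np.concatenate([pts_aruco[j].reshape(-1, 2) for j in keep]).reshape(-1, 1, 2)`.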