Bad results when calibrating a camera over multiple images in Python

I'm currently working on a project to calibrate cameras using multiple images.

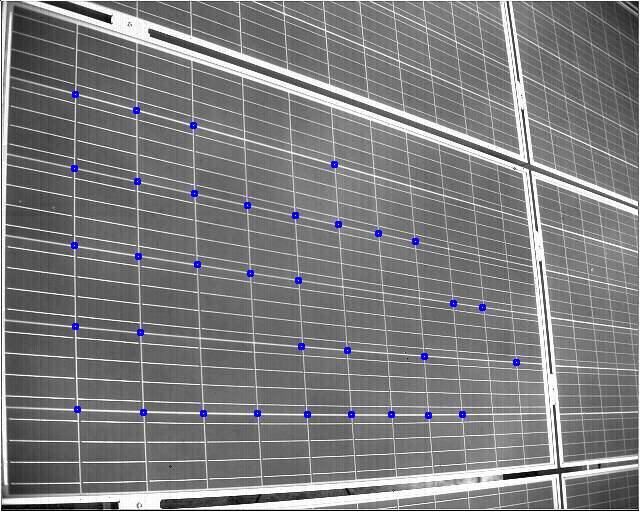

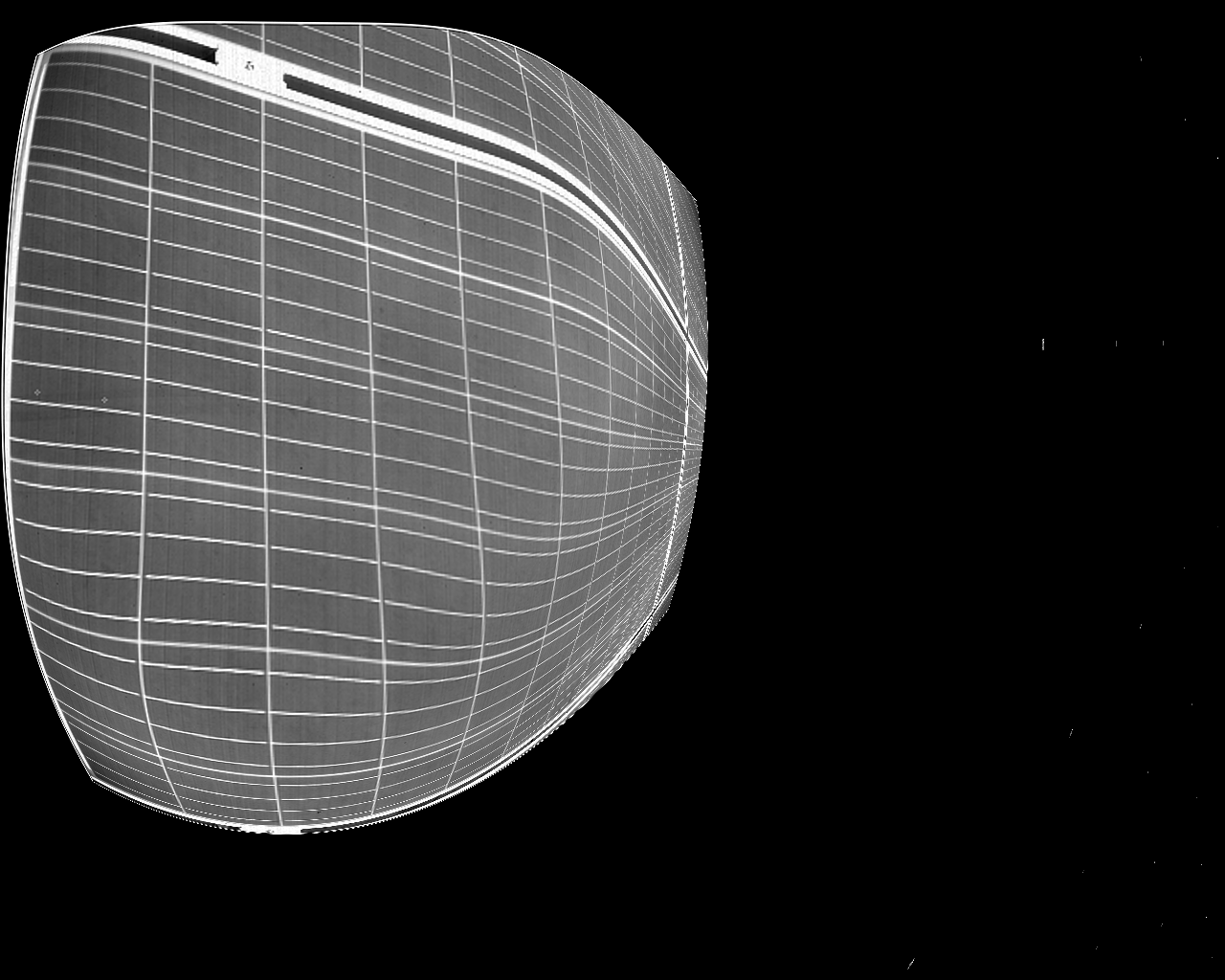

I have images of solar power cells, and my job is to correct the perspective and lens distortion in these images.

I have a procedure that finds the crossing points inside the cell, and I want to use these points to map the picture onto a fixed grid that I have calculated. With them I can correct the perspective, but not the lens distortion.

I am currently using 12 images for this, and each image yields a different number of points.

I am essentially following this example code: Here

Sadly, my result is quite bad.

I am using OpenCV 3.4.5 and have concatenated the image and object points from all images into two big arrays, so I have one Nx3 array (object points) and one Nx2 array (image points).

I'm not sure what I am doing wrong or how to proceed. Since the ROI returned by `getOptimalNewCameraMatrix` is all zeros, I am bypassing the optimized camera matrix for now and undistorting with the original one.

Any hint as to what might be the problem would be greatly appreciated.

Regards, Chris

Here is my calibration code:

```python
import glob
import os

import cv2
import numpy as np

import iman  # my own helper module (not shown here)

# dataset (a glob pattern) and subfolder are defined earlier in the script

objpoints = []
imgpoints = []
z = np.zeros(())

print("starting loop")
for file in glob.glob(dataset):
    filename = os.path.basename(file)
    print(filename)
    to_open = os.path.join(subfolder, filename)
    data = np.loadtxt(to_open)
    z = np.zeros((data.shape[0], 1), np.float32)
    #ob = data[:,2:4]
    objp = np.hstack([data[:, 4:6], z])  # grid (object) points, z = 0
    #objp[:, [1,0]] = objp[:, [0,1]]#np.array(data[:,2],data[:,3],z)
    imgp = data[:, 0:2]  # detected crossing points in the image
    #imgp[:, [1, 0]] = imgp[:, [0, 1]]
    for i in range(0, objp.shape[0]):
        objpoints.append((objp[i, 0], objp[i, 1], objp[i, 2]))
        imgpoints.append((imgp[i, 0], imgp[i, 1]))

# points from every image end up in one array each: shapes (1, N, 3) and (1, N, 2)
objpointsa = np.array([objpoints]).astype(np.float32)
imgpointsa = np.array([imgpoints]).astype(np.float32)
ret, mtx, dist, rvecs, tvecs = iman.calibrate(imgpointsa, objpointsa)
print("calibration matrices established")

to_open = os.path.join(subfolder, filename)
img = cv2.imread('1-67-24-cam31_f25_k20_7A-06-03-10-03-24_live.png')
#h, w = img.shape[:2]
h = img.shape[0]
w = img.shape[1]
newcameramtx, roi = cv2.getOptimalNewCameraMatrix(mtx, dist, (w, h), 1, (w, h))

# undistort
#dst = cv2.undistort(img, mtx, dist, None, newcameramtx)
dst = cv2.undistort(img, mtx, dist, None, mtx)
print(dst)

# crop the image
#x,y,w,h = roi
#dst = dst[y:y+h, x:x+w]
#dst = iman.drawpoints(dst,np.array([1.0,1.0]),0)
cv2.imwrite('calibresult.png', dst)
```

Thus your img pair should get rejected.

I don't think you can calibrate successfully using that data. Why not use a chessboard pattern instead?

+1 on calibrating the camera using a chessboard (or better yet, a ChArUco board) first. This will let you remove the lens distortion, and then you can work out the perspective distortion.

I would start by:

1. Calibrating the camera using a ChArUco board, and convincing yourself that you can undistort the images well.
2. Manually picking the 4 corner points of a solar cell and computing a homography between those points and the corners of your output image, then applying the perspective transform and convincing yourself that it works.

Then try to automate the process somehow. Automatically detecting the grid points (and determining their world-space correspondences) seems likely to be tricky: doable, but I'd expect it to take some work to make robust.