Stereo Map Disparity Values

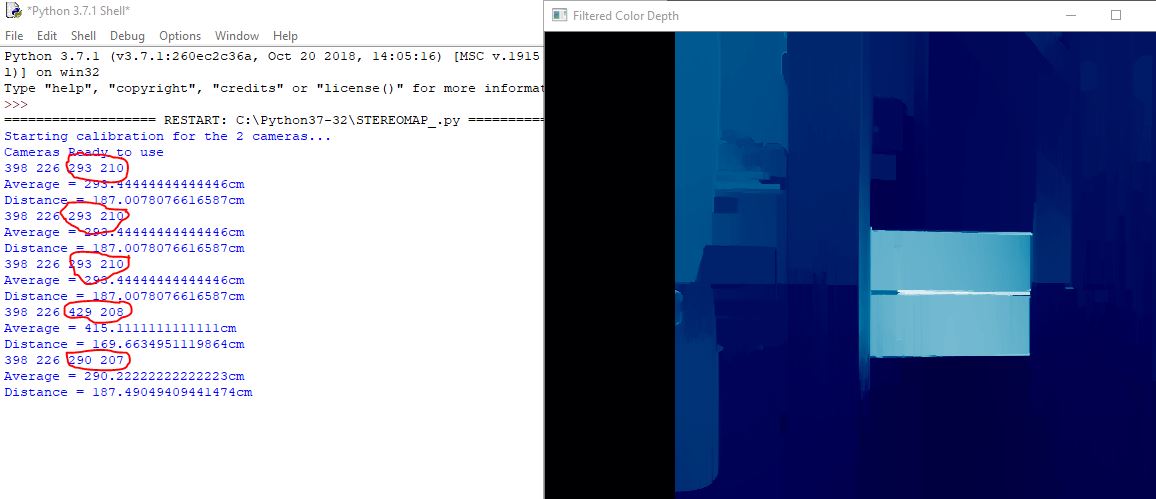

I successfully generated a depth map and printed the disparity at several (x, y) coordinates. From the disparity, I want to derive a distance measurement. The problem is that the disparity values are not stable: when I output them for the same point, the value fluctuates. How can I get static disparity values? Is this fluctuation normal, or do I need to make some adjustments?
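For context, the distance measurement mentioned above usually comes from the standard pinhole stereo relation Z = f * B / d (depth = focal length in pixels times baseline, divided by disparity in pixels). A minimal sketch, assuming rectified cameras; the function name and the focal length and baseline values are hypothetical placeholders, not my calibration:

```python
import numpy as np

def disparity_to_depth(disparity_px, focal_px, baseline_m):
    """Convert disparity (pixels) to depth (metres) via Z = f * B / d."""
    d = np.atleast_1d(np.asarray(disparity_px, dtype=np.float64))
    depth = np.full(d.shape, np.inf)      # zero or negative disparity: no valid depth
    valid = d > 0
    depth[valid] = focal_px * baseline_m / d[valid]
    return depth

# Hypothetical calibration values, for illustration only.
FOCAL_PX = 700.0     # focal length in pixels (assumed)
BASELINE_M = 0.06    # distance between the two cameras in metres (assumed)

# A one-pixel change in disparity already shifts the computed distance.
depths = disparity_to_depth([40.0, 41.0, 42.0], FOCAL_PX, BASELINE_M)
print(depths)
```

Because depth is inversely proportional to disparity, any frame-to-frame jitter in the disparity shows up directly in the computed distance, which is why I care about stable values.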

By the way, I am using two RGB cameras in a live feed.

I click a specific point in the map (the red circles mark the clicked x, y coordinates and their disparity values). As you can see, the 4th and 5th circles show different disparity values from the first three.

The values I print are `str(disp[y,x])` and `str(filteredImg[y,x])`, where `y, x` is the coordinate of the clicked point in the disparity map.
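To make the lookup concrete, here is a minimal sketch of reading the disparity at a clicked point, next to the median of a small window around it as a way to check whether the fluctuation is single-pixel noise. The helper name `read_disparity`, the `half_window` parameter, and the synthetic array are assumptions for illustration; only the `disp[y, x]` indexing comes from my code:

```python
import numpy as np

def read_disparity(disp, x, y, half_window=2):
    """Return (value at the clicked pixel, median of the surrounding window)."""
    h, w = disp.shape[:2]
    # Clamp the window to the image borders.
    y0, y1 = max(0, y - half_window), min(h, y + half_window + 1)
    x0, x1 = max(0, x - half_window), min(w, x + half_window + 1)
    window = disp[y0:y1, x0:x1]
    # NumPy images are indexed row first, so the pixel is disp[y, x].
    return float(disp[y, x]), float(np.median(window))

# Synthetic disparity map standing in for one live frame (not real data).
disp = np.full((10, 10), 32.0)
disp[4, 6] = 40.0    # one noisy pixel at the clicked location

single, med = read_disparity(disp, x=6, y=4)
print(single, med)
```

If the window median stays steady while the single-pixel value jumps between frames, the fluctuation is likely per-pixel matching noise rather than a change in the scene.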