Writing robust (size-invariant) circle detection (Watershed)

I want to detect circles of different sizes. The use case is to detect coins in an image and extract each one individually. Quick summary so far: I use the watershed algorithm, but I probably have a problem with the threshold. It doesn't detect the brighter circles.

It's probably not ideal, but I have already described the problem in a Stack Overflow thread, and I would be really happy to get any further advice: https://stackoverflow.com/questions/5...

I used this watershed algorithm: https://docs.opencv.org/3.4/d3/db4/tu...

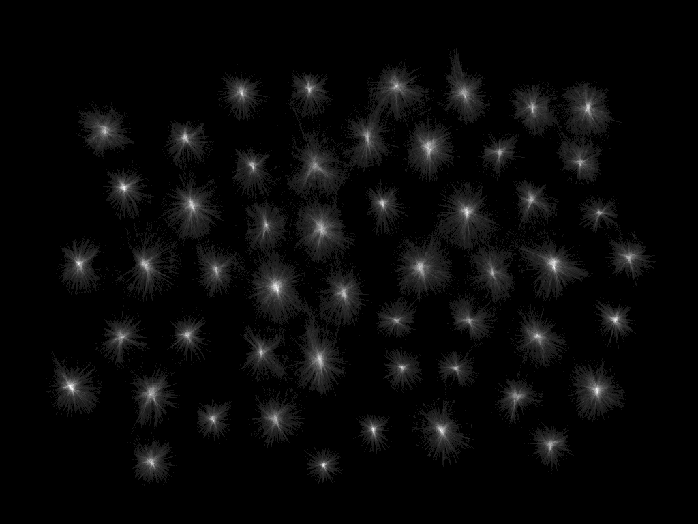

Edit: Example image of my problem.

Edit 2: I tried the radial symmetry transform suggested by kbarni. I used [this](https://github.com/ceilab/frst_python) implementation, but unfortunately I only get a completely black output. I have already tried modifying every parameter, but it didn't help.

    '''
    Implementation of fast radial symmetry transform in pure Python using OpenCV and numpy.

    Adapted from:
    https://github.com/Xonxt/frst

    Which is itself adapted from:
    Loy, G., & Zelinsky, A. (2002). A fast radial symmetry transform for detecting points of interest. Computer
    Vision, ECCV 2002.
    '''

    import cv2
    import numpy as np


    def gradx(img):
        img = img.astype('int')
        rows, cols = img.shape
        # Use hstack to add back in the columns that were dropped as zeros
        return np.hstack((np.zeros((rows, 1)), (img[:, 2:] - img[:, :-2]) / 2.0, np.zeros((rows, 1))))


    def grady(img):
        img = img.astype('int')
        rows, cols = img.shape
        # Use vstack to add back the rows that were dropped as zeros
        return np.vstack((np.zeros((1, cols)), (img[2:, :] - img[:-2, :]) / 2.0, np.zeros((1, cols))))


    # Performs fast radial symmetry transform
    # img: input image, grayscale
    # radii: integer value for radius size in pixels (n in the original paper); also used to size gaussian kernel
    # alpha: Strictness of symmetry transform (higher=more strict; 2 is good place to start)
    # beta: gradient threshold parameter, float in [0,1]
    # stdFactor: Standard deviation factor for gaussian kernel
    # mode: BRIGHT, DARK, or BOTH
    def frst(img, radii, alpha, beta, stdFactor, mode='BOTH'):
        mode = mode.upper()
        assert mode in ['BRIGHT', 'DARK', 'BOTH']
        dark = (mode == 'DARK' or mode == 'BOTH')
        bright = (mode == 'BRIGHT' or mode == 'BOTH')

        workingDims = tuple((e + 2 * radii) for e in img.shape)

        # Set up output and M and O working matrices
        output = np.zeros(img.shape, np.uint8)
        O_n = np.zeros(workingDims, np.int16)
        M_n = np.zeros(workingDims, np.int16)

        # Calculate gradients
        gx = gradx(img)
        gy = grady(img)

        # Find gradient vector magnitude
        gnorms = np.sqrt(np.add(np.multiply(gx, gx), np.multiply(gy, gy)))

        # Use beta to set threshold - speeds up transform significantly
        gthresh = np.amax(gnorms) * beta

        # Find x/y distance to affected pixels
        gpx = np.multiply(np.divide(gx, gnorms, out=np.zeros(gx.shape), where=gnorms != 0),
                          radii).round().astype(int)
        gpy = np.multiply(np.divide(gy, gnorms, out=np.zeros(gy.shape), where=gnorms != 0),
                          radii).round().astype(int)

        # Iterate over all pixels (w/ gradient above threshold)
        for coords, gnorm in np ...
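
One thing that might be worth ruling out when the output looks completely black is that the result only has a very small value range and needs rescaling before it is displayed. This is only a guess about the cause and is not confirmed anywhere in this thread. A minimal numpy sketch of such a rescaling, where `to_displayable` and the `demo` array are hypothetical names and values used for illustration:

```python
import numpy as np


def to_displayable(response):
    """Linearly rescale an arbitrary-range array to uint8 in [0, 255]."""
    response = np.asarray(response, dtype=np.float64)
    lo, hi = response.min(), response.max()
    if hi == lo:
        # Constant input: nothing to stretch, so return an all-zero image
        return np.zeros(response.shape, dtype=np.uint8)
    scaled = (response - lo) / (hi - lo) * 255.0
    return scaled.round().astype(np.uint8)


# Hypothetical response map whose values are far too small to be visible as-is
demo = np.array([[0.0, 0.001], [0.002, 0.004]])
print(to_displayable(demo))
```

If the rescaled image is still black, the transform itself produced no response and the problem lies elsewhere (for example in the threshold or the radius).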

Use the Circular Hough transform on the gradient magnitude image.

(...and generally, an example image you are using would be helpful)
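
For reference, a gradient magnitude image can be sketched with numpy alone. The point of using it is that a coin shows up as a ring at its edge, roughly independent of whether the coin is brighter or darker than the background. The function name `gradient_magnitude` and the synthetic `disk` are assumptions made for this illustration:

```python
import numpy as np


def gradient_magnitude(gray):
    """Return the gradient magnitude of a grayscale image, scaled to uint8."""
    gray = np.asarray(gray, dtype=np.float64)
    # np.gradient returns one array per axis: rows (y) first, then columns (x)
    gy, gx = np.gradient(gray)
    mag = np.hypot(gx, gy)
    peak = mag.max()
    if peak == 0:
        # Flat image: there are no edges at all
        return np.zeros(gray.shape, dtype=np.uint8)
    return (mag / peak * 255.0).astype(np.uint8)


# Synthetic test image: one bright disk of radius 15 on a dark background
yy, xx = np.mgrid[0:64, 0:64]
disk = (((xx - 32) ** 2 + (yy - 32) ** 2) <= 15 ** 2) * 200
edges = gradient_magnitude(disk)
print(edges[32, 32], edges[32, 47])  # centre of the disk vs. a pixel on its edge
```

The resulting `edges` image is what would then be passed to the circle detector instead of the raw grayscale image.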

Thank you for your advice. I already tried the Hough transform, but it is too size-sensitive: I would have to change the parameters for every image (which is not possible, since the coins are photographed from different heights). Or does it behave differently on the gradient magnitude image?

There are two parameters for the min/max radius. You can set them so that it detects both the very small and the very large coins. Otherwise use the radial symmetry transform (I will write an answer on that below).

What I proposed was a slightly modified version of the Radial Symmetry Transform; try to follow the algorithm I described.

In the code, use just the `if bright:` part; and instead of changing only `O_n[ppve] += 1`, increment every pixel between `coord` and `ppve` (draw a line).

The `ppve` has to be computed a bit differently, so that the distance between `coord` and `ppve` is a constant `D` (60 pixels should work in the example image).

I didn't manage to follow and implement your algorithm :( I'm very new to Python and have some problems.

Maybe you could tell me how I can draw a line with a constant length?

I tried these changes but they didn't work (I added an image to the question):
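
One way the modification described above could be sketched in pure numpy: for every pixel with a strong gradient, compute an end point at constant distance `D` along the unit gradient and add one vote to every accumulator pixel on the line in between. This is an interpretation of the description in this thread, not the answerer's actual code; `line_pixels`, `vote_along_gradient` and the soft-edged test disk are hypothetical. Rows are offset by the y-gradient and columns by the x-gradient.

```python
import numpy as np


def line_pixels(start, end):
    """Integer (row, col) pixels on the segment from start to end, inclusive."""
    (r0, c0), (r1, c1) = start, end
    n = int(max(abs(r1 - r0), abs(c1 - c0))) + 1
    rows = np.linspace(r0, r1, n).round().astype(int)
    cols = np.linspace(c0, c1, n).round().astype(int)
    return rows, cols


def vote_along_gradient(gray, D=60, beta=0.2):
    """Accumulate votes along lines of length D in the gradient direction."""
    gray = np.asarray(gray, dtype=np.float64)
    gy, gx = np.gradient(gray)          # rows (y) first, then columns (x)
    gnorms = np.hypot(gx, gy)
    gthresh = gnorms.max() * beta       # same thresholding idea as in frst()
    h, w = gray.shape
    acc = np.zeros((h, w), dtype=np.int32)
    for (i, j), gnorm in np.ndenumerate(gnorms):
        if gnorm <= gthresh:
            continue
        # End point at constant distance D along the unit gradient vector
        end = (i + D * gy[i, j] / gnorm, j + D * gx[i, j] / gnorm)
        rows, cols = line_pixels((i, j), end)
        # Drop the part of the line that falls outside the image
        inside = (rows >= 0) & (rows < h) & (cols >= 0) & (cols < w)
        acc[rows[inside], cols[inside]] += 1
    return acc


# Synthetic test: bright disk (radius about 20) with a soft edge, centred at (40, 40)
yy, xx = np.mgrid[0:81, 0:81]
dist = np.hypot(yy - 40, xx - 40)
soft_disk = 255.0 * np.clip((20 - dist) / 4 + 0.5, 0, 1)
acc = vote_along_gradient(soft_disk, D=25)
print(np.unravel_index(acc.argmax(), acc.shape))
```

For a bright circle the gradient points inward, so the lines from all edge pixels cross near the centre as long as `D` is at least the radius. The loop is slow for large images; it is written for readability, not speed.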

    # Iterate over all pixels (w/ gradient above threshold)
    for coords, gnorm in np.ndenumerate(gnorms):
        if gnorm > gthresh:
            i, j = coords
            # Positively affected pixel
            ppve = (i + gpx[i, j], j + gpy[i, j])
            O_n[ppve] += 1
            M_n[ppve] += gnorm
            output = cv2.line(output, coords, ppve, (255, 255, 255), 1)

Don't use `cv2.line`, rather a line iterator. As far as I can see, it's not part of the Python API, but here is a solution: https://stackoverflow.com/questions/3...

I suggest learning a bit of Python and OpenCV first, then trying to implement the algorithm I described in the answer yourself, instead of searching for a ready-made solution online and then trying to modify it.

I tried your approach again and the results are in my question. Your suggestion is absolutely right and I want to learn that. But I'm really under a lot of (time) pressure to get this done :( It's probably not how this forum is supposed to be used, but could you maybe share your code with me, please? I have to get this right :/

I added my code, it's C++; but it shouldn't be hard to rewrite it in Python (but you still need to know Python)...

...and you still need to write your algorithm for local maximum detection.
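
As a starting point for the local maximum detection, here is a minimal numpy-only sketch. It marks every accumulator pixel that equals the maximum of its square neighbourhood and has enough votes. The function name `local_maxima` and the parameters `window` (assumed odd) and `min_votes` are hypothetical, and this is only one of several possible approaches:

```python
import numpy as np


def local_maxima(acc, window=5, min_votes=1):
    """Return (row, col) positions that are the maximum of their window."""
    acc = np.asarray(acc)
    pad = window // 2
    h, w = acc.shape
    # Pad with the global minimum so border pixels can still be maxima
    padded = np.pad(acc, pad, mode="constant", constant_values=acc.min())
    # Neighbourhood maximum built from shifted views of the padded array
    neigh_max = np.full(acc.shape, acc.min(), dtype=acc.dtype)
    for dr in range(window):
        for dc in range(window):
            neigh_max = np.maximum(neigh_max, padded[dr:dr + h, dc:dc + w])
    peaks = (acc == neigh_max) & (acc >= min_votes)
    return [(int(r), int(c)) for r, c in zip(*np.nonzero(peaks))]


# Small accumulator with two isolated peaks and one weaker neighbour
demo_acc = np.zeros((10, 10), dtype=np.int32)
demo_acc[2, 3] = 5
demo_acc[7, 7] = 9
demo_acc[7, 8] = 4
print(local_maxima(demo_acc))
```

Note that a flat plateau of equal values yields several peaks, so smoothing the accumulator first or merging nearby peaks afterwards may be needed on real data.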