Strategies for removing aliasing / refresh rate effects

I'm trying to send various 8x8 pixel values to an LED matrix and take a picture at each cycle. This is on a Raspberry Pi 3 using the Sense Hat and a Logitech C920 webcam (for now; could use the rpi camera).

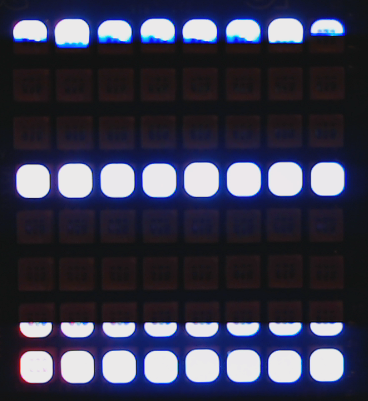

Here's a raw image example:

Somewhere in googling around I found this post about long exposures, so I implemented that strategy. Namely, I can grab n frames and average them. It's not the best, but I get things more like this:

I've done this with my DSLR, but I'm trying to automate. With the DSLR, I just manually tweak the exposure and aperture to get a good shot. I tried that with various cam.set(cv2.CAP_FOO) settings and had a tough time. I opted to just set them directly with subprocess and v4l2-ctl, but I can't seem to get a good setting for exposure_absolute, brightness, and others to get a good picture.

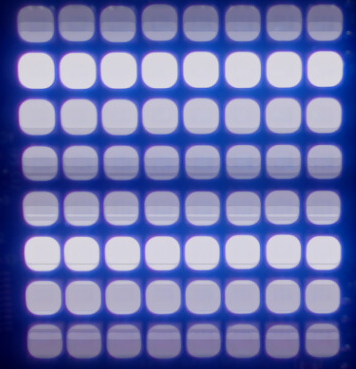

Any suggestions on how to get something cleaner and with good color representation? The below seem overexposed to me. Here's an example of a 16x16 LED matrix shot with my DSLR (what I'd like to see):

Here's how I'm setting up the camera:

    import subprocess
    import cv2

    def setup_cam():
        # Manual camera settings, applied one at a time with v4l2-ctl
        cam_props = {'brightness': 108, 'contrast': 128, 'saturation': 158,
                     'gain': 10, 'sharpness': 128, 'exposure_auto': 1,
                     'exposure_absolute': 100, 'exposure_auto_priority': 0,
                     'focus_auto': 0, 'focus_absolute': 50, 'zoom_absolute': 150,
                     'white_balance_temperature_auto': 0, 'white_balance_temperature': 3000}
        for key, value in cam_props.items():
            subprocess.call(['v4l2-ctl', '-d', '/dev/video0', '-c', '{}={}'.format(key, value)])
        # Request 30 fps, then list the controls to confirm what was applied
        subprocess.call(['v4l2-ctl', '-d', '/dev/video0', '-p', '30'])
        subprocess.call(['v4l2-ctl', '-d', '/dev/video0', '-l'])
        cam = cv2.VideoCapture(0)
        return cam

Here's the function to take an averaged picture based on that post above:

    def take_pic():
        # Running per-channel average of 10 frames; `cam` is the capture from setup_cam()
        r_ave, g_ave, b_ave, total = (0, 0, 0, 0)
        for i in range(10):
            (ret, frame) = cam.read()
            (b, g, r) = cv2.split(frame.astype('float'))
            r_ave = ((total * r_ave) + (1 * r)) / (total + 1.0)
            g_ave = ((total * g_ave) + (1 * g)) / (total + 1.0)
            b_ave = ((total * b_ave) + (1 * b)) / (total + 1.0)
            # increment the total number of frames read thus far
            total += 1
        return cv2.merge([b_ave, g_ave, r_ave]).astype("uint8")

@berak yes, hence pursuing algorithms for bypassing it, given that I don't have better hardware to work with :) Could you elaborate on the lower resolution? Or what effect that would have?

i removed the comment, because i realized that upping the hardware is probably NOT an option

still, to retrieve the context: Nyquist

if you lower the resolution, you might get a higher framerate (less pixels to transport)

(or the equivalent thing with v4l2-ctl)
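A minimal sketch of the `v4l2-ctl` route, assuming the camera is `/dev/video0` and offers a 320x240 MJPG mode (both are assumptions; `v4l2-ctl --list-formats-ext` shows what the device really supports). The helper names `low_res_commands` and `apply_low_res` are hypothetical, and the driver may still clamp the frame rate to whatever the chosen mode allows.

```python
import subprocess

def low_res_commands(device="/dev/video0", width=320, height=240,
                     pixelformat="MJPG", fps=60):
    """Build v4l2-ctl commands that request a smaller frame and a higher rate.

    The defaults are illustrative assumptions, not values from the thread.
    """
    fmt = "--set-fmt-video=width={},height={},pixelformat={}".format(
        width, height, pixelformat)
    return [
        ["v4l2-ctl", "-d", device, fmt],             # smaller frame size
        ["v4l2-ctl", "-d", device, "-p", str(fps)],  # then ask for more fps
    ]

def apply_low_res(**kwargs):
    """Run the commands; v4l2-ctl prints the frame rate the driver accepted."""
    for cmd in low_res_commands(**kwargs):
        subprocess.call(cmd)
```

OpenCV may renegotiate the format when it opens the device, so the same size may also need to be requested with `cam.set(cv2.CAP_PROP_FRAME_WIDTH, 320)` and `cam.set(cv2.CAP_PROP_FRAME_HEIGHT, 240)` after `cv2.VideoCapture(0)`.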

then: do you really have to split/merge the frames for averaging ? (more time spent on processing will only make your problem worse, imho. e.g. addWeighted() or whatever you can do from numpy would save you a lot of copying)
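As a sketch of the numpy route suggested here, whole frames can be accumulated in one float array instead of being split into channels and merged again. `average_frames` is a hypothetical helper; it assumes every frame is a same-shaped `uint8` array such as the one `cam.read()` returns.

```python
import numpy as np

def average_frames(frames):
    """Average an iterable of same-shaped uint8 frames without splitting channels."""
    acc = None
    count = 0
    for frame in frames:
        if acc is None:
            # Float accumulator so the running sum cannot overflow uint8
            acc = np.zeros(frame.shape, dtype=np.float64)
        acc += frame  # in-place add over all channels at once
        count += 1
    if count == 0:
        raise ValueError("no frames to average")
    # Round to nearest instead of truncating when going back to 8 bits
    return np.round(acc / count).astype(np.uint8)
```

With a live camera this could be called as `average_frames(cam.read()[1] for _ in range(10))`. Note that it rounds the result, whereas the `take_pic()` version truncates.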

I've seen inklings that lower resolutions could give higher frame rates, but I haven't figured out how to do this. It might be limited by the hardware. For example, `v4l2-ctl -d 1 -p 60` responds `Frame rate set to 30.000 fps`. I'll try it anyway, as maybe just grabbing less would be faster? Alternatively, on my DSLR, I would actually lengthen the exposure to not see the refresh rate. I haven't seen `fps` affect that during capture though. Thanks for the tip on `numpy`; I vaguely recall seeing that these could be treated as arrays, but hadn't looked into it.

Question. Are you trying to see patterns that are one flash of the LED? Or are they present for multiple flashes?

If it's the second, you might not want to average them, you might want to take the maximum value.

It's a silly project where I want to take a picture file, break it up into 8x8 pixel chunks, send those RGB vals to the LED matrix, take a picture of each, and then use imagemagick `montage` to stitch them back together. I did a test run on a DIY 16x16 LED panel I made, but turns out different strips I used had varying red/blue base hues, so I thought this Rpi Sense Hat might be more uniform. In addition, this project was to see if I could automate it (send vals, take pic, then stitch). The last attempt was manually taking DSLR pics! If you're curious here's an example.

So you control the LEDs? So you can turn them on as long as you need to get the picture (IE: 1 second)

There are 3 ways to get good results: take a frame with a short enough exposure that you get all the LEDs lit at the same time, take a long exposure so they're all lit for at least part of the time, or combine multiple frames together. Your frame averaging is an example of the third.

If you can't get the exposure right, try taking the maximum value of, say, 15 frames and see if that works.
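A minimal sketch of the maximum-value idea, assuming the frames are same-shaped arrays like those from `cam.read()`. `max_frames` is a hypothetical helper name, not something from OpenCV.

```python
import numpy as np

def max_frames(frames):
    """Per-pixel, per-channel maximum over an iterable of same-shaped frames."""
    result = None
    for frame in frames:
        if result is None:
            result = frame.copy()  # copy so the first frame is not modified
        else:
            result = np.maximum(result, frame)  # keep the brighter value
    if result is None:
        raise ValueError("no frames given")
    return result
```

Because the maximum keeps the brightest sample of every pixel, an LED that is dark in some frames still shows up lit. The trade-off is that sensor noise is biased upward and anything already clipped stays clipped.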

I do control the LEDs, but the issue isn't how long they're on. They're not changing during the shot; the above examples are me taking pictures while they're static. My interpretation is that it's the refresh rate vs. the shutter speed of the camera. Initially I had it in my head I needed a faster frame rate, but I now think it's the opposite. The exposure setting seems to affect this the most, but too little and the shutter speeds up so I see aliasing. Too much and I don't get the aliasing, but the color washes out as I think it's overexposed. On my DSLR I can dial down the aperture to get the right balance with shutter speed and properly expose things.
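One way to make the "washed out" judgement less subjective is to measure how much of a frame is clipped while stepping `exposure_absolute` down. The sketch below is only an assumption about how that check could look: `clipped_fraction` is a hypothetical helper, the threshold of 250 is an arbitrary choice, and it expects a height x width x channels `uint8` frame.

```python
import numpy as np

def clipped_fraction(img, threshold=250):
    """Fraction of pixels with at least one channel at or above `threshold`."""
    # A pixel counts as clipped if any of its channels is near saturation
    saturated = (img >= threshold).any(axis=-1)
    return float(saturated.mean())
```

A frame where the lit LEDs report a high clipped fraction has lost color information in those pixels, so a lower exposure or gain is worth trying before averaging.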

"It's a silly project" -- you're not alone

"but the issue isn't how long they're on. They're not changing during the shot" -- maybe it still is. usually, LEDs are pulsed by a frequency generator, to make them brighter.

@berak: Woah, I must have not signed in for forever, as I just re-logged in here with my Google ID and it notified me of this. Cool project!! My point was in reply to the suggestion to leave them on while I'm taking the picture, which of course I'm doing. I'm on the same page as you that this is a conflict of shutter speed and refresh rate. I never did solve this...