Why is a vGPU (Tesla K80) on Google Cloud slower than the GTX 940M in my T460P?

I am not sure whether this question is suitable for this forum. If not, please let me know and I will move it to another forum.

I have a question about the vGPU (Tesla K80) on a Google Cloud VM.

My laptop is a Lenovo T460P with the following specs:

- CPU: Intel Core i7-6700HQ

- RAM: 16GB

- GPU: NVIDIA GTX 940M (CUDA Cores: 384)

- OS: Windows 10 Pro 64-bit

- OpenCV: 3.2.0 with CUDA 8.0 support (I downloaded it from http://jamesbowley.co.uk/downloads/)

The VM I created on Google Cloud has the following specs:

- CPU: vCPU x 2

- RAM: 4GB

- HDD: 25GB

- GPU: vGPU (Tesla K80) x 1 (CUDA Cores 4992)

- OS: Ubuntu 16.04 LTS 64bit

- CUDA driver: I followed the install procedure at this link: https://cloud.google.com/compute/docs...

- OpenCV: 3.2.0, compiled with the following CMake parameters:

```sh
cmake -D CMAKE_BUILD_TYPE=RELEASE -D CMAKE_INSTALL_PREFIX=/usr/local \
      -D WITH_CUDA=ON -D WITH_CUBLAS=ON -D WITH_TBB=ON -D CUDA_GENERATION=Auto \
      -D ENABLE_FAST_MATH=1 -D CUDA_FAST_MATH=1 -D WITH_NVCUVID=1 \
      -D WITH_CUFFT=ON -D WITH_EIGEN=ON -D WITH_IPP=ON
```

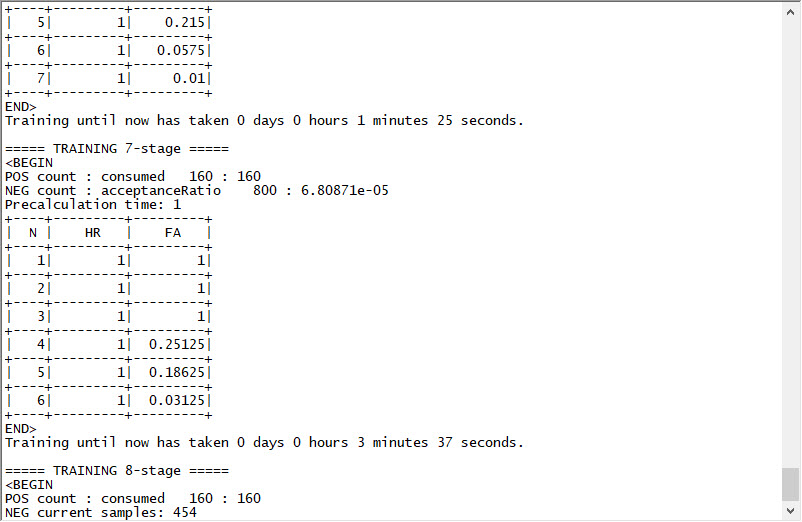

I use OpenCV to train an LBP cascade with 160 positive images and 800 negative images, using the same positive and negative samples in both environments. Below are the parameters I pass to opencv_createsamples and opencv_traincascade:

```sh
opencv_createsamples -info data/positive_images/positives.txt -vec data/positive_images/positives.vec -w 32 -h 32

opencv_traincascade -data classifier -vec data/positive_images/positives.vec -bg data/negative_images/negatives.txt -mode BASIC -featureType LBP -numPos 160 -numNeg 800 -minHitRate 0.998 -maxFalseAlarmRate 0.05 -w 32 -h 32 -numStages 10
```
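To make sure both machines run exactly the same training and to get comparable wall-clock numbers, one option is to drive the two commands from a small script. This is a minimal sketch, not part of OpenCV: `build_commands` and `run_timed` are hypothetical helper names, it assumes `opencv_createsamples` and `opencv_traincascade` are on the `PATH`, and it uses only the flags already given in the commands above.

```python
import os
import subprocess
import time
from typing import List


def build_commands(num_pos: int = 160, num_neg: int = 800) -> List[List[str]]:
    """Return the createsamples and traincascade argument lists from the question.

    Hypothetical helper: the flags mirror the two commands in the post.
    """
    createsamples = [
        "opencv_createsamples",
        "-info", "data/positive_images/positives.txt",
        "-vec", "data/positive_images/positives.vec",
        "-w", "32", "-h", "32",
    ]
    traincascade = [
        "opencv_traincascade",
        "-data", "classifier",
        "-vec", "data/positive_images/positives.vec",
        "-bg", "data/negative_images/negatives.txt",
        "-mode", "BASIC",
        "-featureType", "LBP",
        "-numPos", str(num_pos),
        "-numNeg", str(num_neg),
        "-minHitRate", "0.998",
        "-maxFalseAlarmRate", "0.05",
        "-w", "32", "-h", "32",
        "-numStages", "10",
    ]
    return [createsamples, traincascade]


def run_timed(argv: List[str]) -> float:
    """Run one command to completion and return its wall-clock time in seconds."""
    start = time.perf_counter()
    subprocess.run(argv, check=True)  # raises CalledProcessError on a non-zero exit
    return time.perf_counter() - start


if __name__ == "__main__":
    # Create the -data output directory up front in case it does not exist yet.
    os.makedirs("classifier", exist_ok=True)
    for argv in build_commands():
        elapsed = run_timed(argv)
        print(f"{argv[0]}: {elapsed / 60:.1f} min")
```

Running the same script on the laptop and on the VM gives one timing per step from identical arguments, so any difference comes from the machines rather than from a mistyped flag.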

However, my laptop took around 5 minutes to finish the training, while the Google Cloud VM had been running for over 20 minutes and was still at TRAINING 8-stage.

What is wrong with the Google Cloud VM? I expected it to compute faster than my laptop because it has a Tesla K80, but it is actually far slower.

Did I miss something, or do something wrong on the Google Cloud VM?

Thanks for any help.

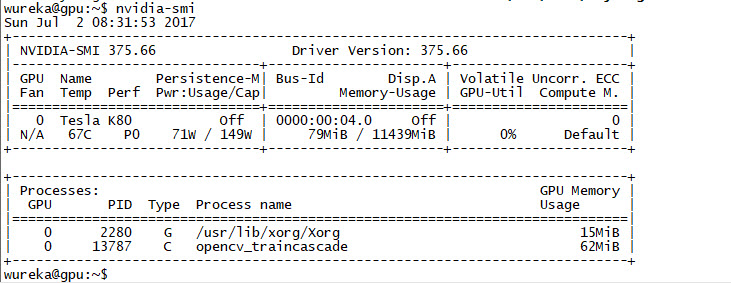

I'm not actually sure that classifier training has a GPU code path at all...
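If that is the case and the training runs on the CPU (the VM build also sets `WITH_TBB=ON`, so it may be multithreaded), then the number of CPU threads on each machine matters more than the GPU's core count. A quick way to compare the two machines is the small check below; `usable_cpu_threads` is a hypothetical helper name, and this is only a sketch for comparison, not a diagnosis.

```python
import os


def usable_cpu_threads() -> int:
    """Return how many logical CPUs this process is allowed to run on.

    On Linux, os.sched_getaffinity respects the process's CPU affinity mask;
    on platforms without it (e.g. Windows) fall back to os.cpu_count().
    """
    if hasattr(os, "sched_getaffinity"):
        return len(os.sched_getaffinity(0))
    return os.cpu_count() or 1


if __name__ == "__main__":
    print(f"logical CPUs visible:   {os.cpu_count()}")
    print(f"usable by this process: {usable_cpu_threads()}")
```

The i7-6700HQ has 4 cores and 8 threads, so the laptop should report 8, while a 2-vCPU VM should report 2.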

I'm not very familiar with the nvidia-smi report, but isn't that just the default process? Doesn't that mean the GPU is not being used for computation?
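One way to check is to ask `nvidia-smi` which processes hold a compute context on the GPU while the training is running. The sketch below assumes a Linux VM with the NVIDIA driver installed and uses `nvidia-smi --query-compute-apps=pid,process_name --format=csv,noheader`. `parse_compute_apps` and `gpu_processes` are hypothetical helper names. If `opencv_traincascade` never appears in the list during training, that strongly suggests it is not doing any CUDA work.

```python
import shutil
import subprocess
from typing import List, Tuple


def parse_compute_apps(csv_text: str) -> List[Tuple[int, str]]:
    """Parse 'pid, process_name' rows from nvidia-smi CSV output (no header).

    Lines that are not of that form (blank lines, informational messages)
    are skipped.
    """
    apps = []
    for line in csv_text.splitlines():
        parts = line.split(",", 1)
        if len(parts) != 2 or not parts[0].strip().isdigit():
            continue
        apps.append((int(parts[0].strip()), parts[1].strip()))
    return apps


def gpu_processes() -> List[Tuple[int, str]]:
    """Return (pid, name) for every process with a compute context on a GPU."""
    if shutil.which("nvidia-smi") is None:
        raise RuntimeError("nvidia-smi not found on PATH")
    out = subprocess.run(
        ["nvidia-smi", "--query-compute-apps=pid,process_name",
         "--format=csv,noheader"],
        check=True, capture_output=True, text=True,
    ).stdout
    return parse_compute_apps(out)


if __name__ == "__main__":
    procs = gpu_processes()
    print(procs)
    print("traincascade on GPU:",
          any("opencv_traincascade" in name for _, name in procs))
```

Run it in a second terminal while `opencv_traincascade` is in the middle of a stage.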