Cannot distinguish two insects using SIFT

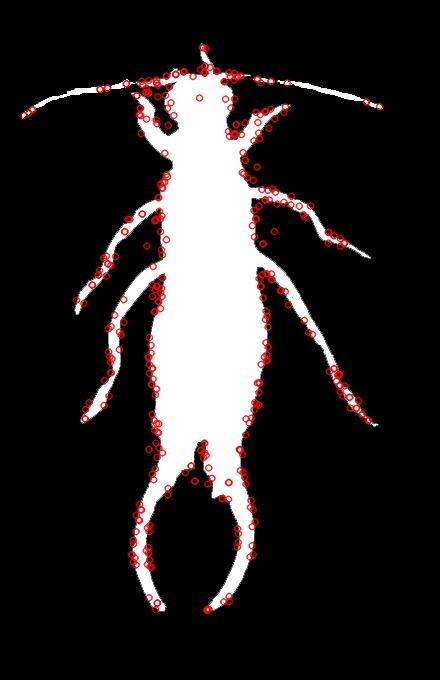

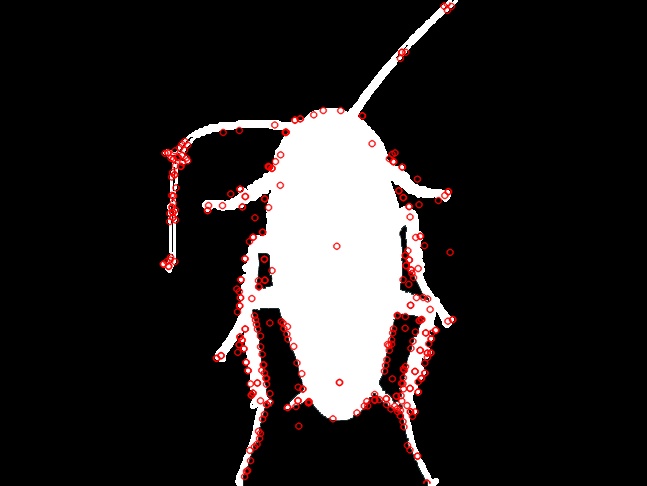

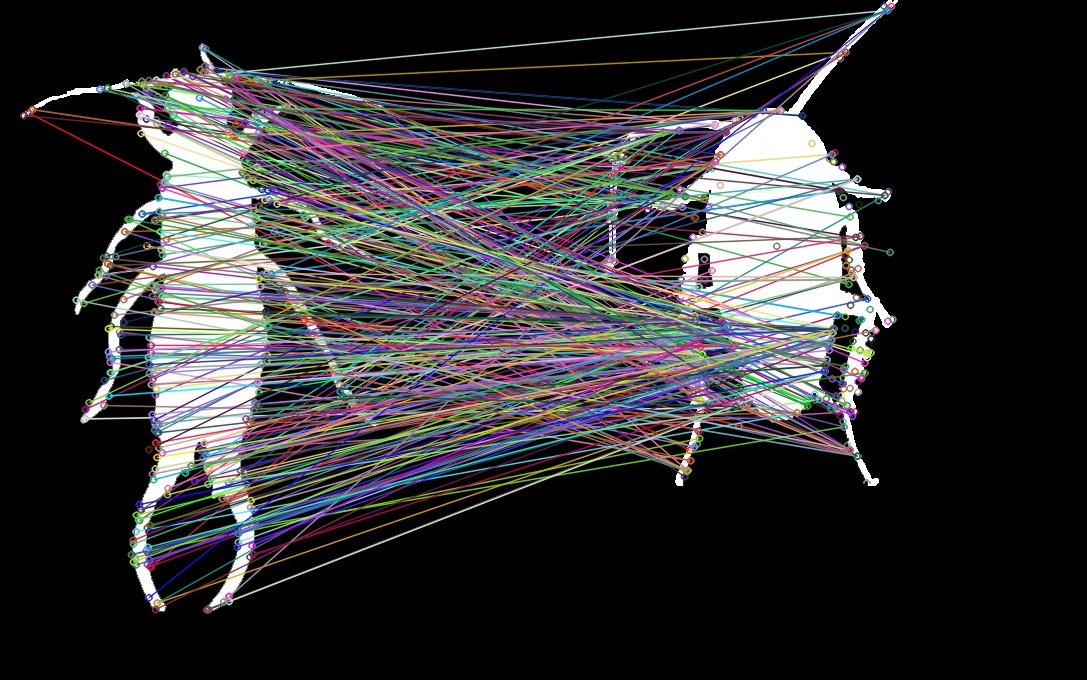

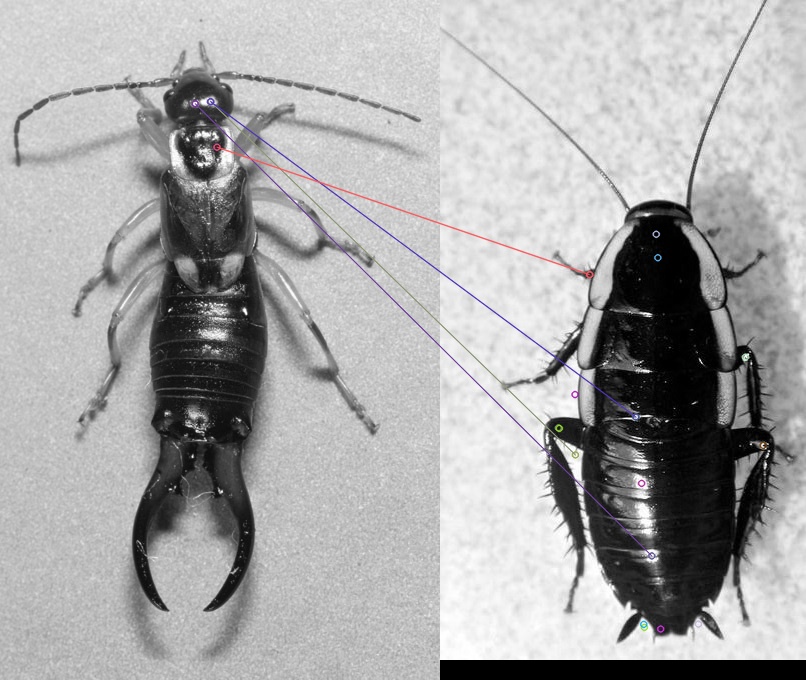

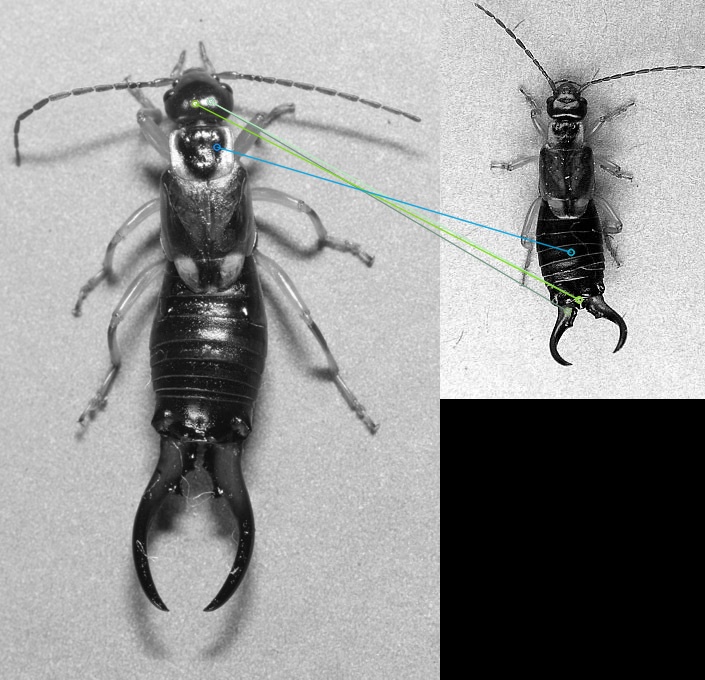

I want to create a classifier that identifies an insect from a captured image. At first I used HuMoments, but images captured at different resolutions gave incorrect results, which I attributed to HuMoments not being scale invariant. After some searching on the internet, I found that SIFT or SURF could solve my problem, so I tried SIFT to see what happens. The first two images belong to two different kinds of insect. The result was bizarre: all 400 features matched (see the third image).

```cpp
#include <cstdio>
#include <cstdlib>
#include <iostream>
#include <vector>
#include <opencv2/core/core.hpp>
#include <opencv2/features2d/features2d.hpp>
#include <opencv2/highgui/highgui.hpp>
#include <opencv2/nonfree/features2d.hpp>  // SIFT lives in the nonfree module in OpenCV 2.4

using namespace cv;
using namespace std;

int main()
{
    // firstInsect, secondInsect, *XML and *Picture are file-name variables defined elsewhere (not shown)
    Mat src = imread(firstInsect);
    Mat src2 = imread(secondInsect);
    if (src.empty() || src2.empty())
    {
        printf("Cannot read one of the images\n");
        return -1;
    }

    // Detect keypoints in the first image, keeping at most the 400 strongest
    SiftFeatureDetector detector(400);
    vector<KeyPoint> keypoints;
    detector.detect(src, keypoints);
    //cout << keypoints.size() << " of keypoints are found" << endl;

    // Note: write() stores the detector's parameters, not the detected keypoints
    cv::FileStorage fs(firstInsectXML, FileStorage::WRITE);
    detector.write(fs);
    fs.release();

    // Same for the second image
    SiftFeatureDetector detector2(400);
    vector<KeyPoint> keypoints2;
    detector2.detect(src2, keypoints2);

    cv::FileStorage fs2(secondInsectXML, FileStorage::WRITE);
    detector2.write(fs2);
    fs2.release();

    // Compute the SIFT descriptors for the keypoints.
    // The result is a matrix where row "i" is the 128-element descriptor of keypoint "i"
    SiftDescriptorExtractor extractor;
    Mat descriptors;
    extractor.compute(src, keypoints, descriptors);

    SiftDescriptorExtractor extractor2;
    Mat descriptors2;
    extractor2.compute(src2, keypoints2, descriptors2);

    //Print some statistics on the matrices returned
    //Size size = descriptors.size();
    //cout<<"Query descriptors height: "<<size.height<< " width: "<<size.width<< " area: "<<size.area() << " non-zero: "<<countNonZero(descriptors)<<endl;
    //saveKeypoints(keypoints, detector);

    // Draw the detected keypoints in red and save both images
    Mat output;
    drawKeypoints(src, keypoints, output, Scalar(0, 0, 255), DrawMatchesFlags::DEFAULT);
    imwrite(firstInsectPicture, output);

    Mat output2;
    drawKeypoints(src2, keypoints2, output2, Scalar(0, 0, 255), DrawMatchesFlags::DEFAULT);
    imwrite(secondInsectPicture, output2);

    // Corresponding points: for each descriptor of the first image,
    // find the nearest descriptor (L2 distance) in the second image
    BFMatcher matcher(NORM_L2);
    vector<DMatch> matches;
    matcher.match(descriptors, descriptors2, matches);
    cout << "Number of matches: " << matches.size() << endl;

    Mat img_matches;
    drawMatches(src, keypoints, src2, keypoints2, matches, img_matches);
    imwrite(resultPicture, img_matches);

    system("PAUSE");
    waitKey(10000);
    return 0;
}
```
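A minimal sketch of what the matching step does, written in plain C++ without OpenCV so it runs on its own (the names `Descriptor`, `squaredL2` and `nearestNeighbours` are hypothetical). A brute-force `match()` without cross-checking or a mask returns the nearest train descriptor for every query descriptor and applies no distance threshold. Under that behaviour the printed count equals the number of descriptors in the first image (at most 400 here), whatever the non-empty second image contains.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical stand-in for one descriptor row (SIFT rows have 128 floats).
using Descriptor = std::vector<float>;

// Squared Euclidean (L2) distance between two descriptors of equal length.
float squaredL2(const Descriptor& a, const Descriptor& b) {
    assert(a.size() == b.size());
    float sum = 0.0f;
    for (std::size_t i = 0; i < a.size(); ++i) {
        float d = a[i] - b[i];
        sum += d * d;
    }
    return sum;
}

// Mimics brute-force match(): for every query row, return the index of the
// closest train row. No threshold is applied, so every query gets a partner,
// even when the two images have nothing in common.
std::vector<std::size_t> nearestNeighbours(const std::vector<Descriptor>& query,
                                           const std::vector<Descriptor>& train) {
    std::vector<std::size_t> result;
    if (train.empty()) return result;
    for (const Descriptor& q : query) {
        std::size_t best = 0;
        float bestDist = std::numeric_limits<float>::max();
        for (std::size_t j = 0; j < train.size(); ++j) {
            float d = squaredL2(q, train[j]);
            if (d < bestDist) {
                bestDist = d;
                best = j;
            }
        }
        result.push_back(best);
    }
    return result;
}
```

Three query descriptors that are far from both train descriptors still yield three "matches", which is the same effect as all 400 features matching.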

Question 1: Why do all of the features match between these two images?

Question 2: How can I store the features of an image (e.g. in an XML file) so that they can be used to train a classification tree (e.g. a random tree)?
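For Question 2, one consideration is that a random tree expects a fixed-length feature vector per image, while SIFT yields a different number of 128-dimensional rows per image. A common approach (an assumption here, not something the post tries) is a bag of visual words: cluster descriptors from all training images into k "visual words" (OpenCV 2.4 provides `BOWKMeansTrainer` and `BOWImgDescriptorExtractor` for this), then describe each image by a normalised histogram of word assignments. That histogram is a single matrix row and can be written with `cv::FileStorage` like any other `Mat`. The plain C++ sketch below shows only the histogram step; `Descriptor`, `squaredL2` and `bowHistogram` are hypothetical names, and the vocabulary is assumed to be given.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical stand-in for one descriptor row (SIFT rows have 128 floats).
using Descriptor = std::vector<float>;

// Squared Euclidean (L2) distance between two descriptors of equal length.
float squaredL2(const Descriptor& a, const Descriptor& b) {
    assert(a.size() == b.size());
    float sum = 0.0f;
    for (std::size_t i = 0; i < a.size(); ++i) {
        float d = a[i] - b[i];
        sum += d * d;
    }
    return sum;
}

// Builds a fixed-length, L1-normalised histogram: each descriptor votes for
// its nearest "visual word" in the vocabulary (assumed to be, e.g., k-means
// centres computed over descriptors from all training images).
std::vector<float> bowHistogram(const std::vector<Descriptor>& descriptors,
                                const std::vector<Descriptor>& vocabulary) {
    std::vector<float> hist(vocabulary.size(), 0.0f);
    if (vocabulary.empty() || descriptors.empty()) return hist;
    for (const Descriptor& d : descriptors) {
        std::size_t word = 0;
        float bestDist = std::numeric_limits<float>::max();
        for (std::size_t w = 0; w < vocabulary.size(); ++w) {
            float dist = squaredL2(d, vocabulary[w]);
            if (dist < bestDist) {
                bestDist = dist;
                word = w;
            }
        }
        hist[word] += 1.0f;
    }
    // Normalise so images with different keypoint counts are comparable.
    for (float& h : hist) h /= static_cast<float>(descriptors.size());
    return hist;
}
```

Whatever the number of keypoints, every image maps to a vector of length k, which is the shape a tree classifier expects.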

EDIT:

Working on grayscale images does not change the results: matching two insects of the same kind and matching two insects of different kinds produce the same number of matches.
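Because the raw count from `match()` is fixed by the number of query descriptors, it cannot differ between same-kind and different-kind pairs; a count meant to reflect similarity needs filtering. One widely used filter (swapped in here, not part of the original code) is Lowe's ratio test: keep a match only when the nearest neighbour is clearly closer than the second nearest. In OpenCV this is usually done with `knnMatch` and k = 2; the sketch below reimplements the test in plain C++ so it runs standalone. The 0.8 threshold and the names `Descriptor`, `l2` and `countRatioTestMatches` are assumptions.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical stand-in for one descriptor row (SIFT rows have 128 floats).
using Descriptor = std::vector<float>;

// Euclidean (L2) distance between two descriptors of equal length.
float l2(const Descriptor& a, const Descriptor& b) {
    assert(a.size() == b.size());
    float sum = 0.0f;
    for (std::size_t i = 0; i < a.size(); ++i) {
        float d = a[i] - b[i];
        sum += d * d;
    }
    return std::sqrt(sum);
}

// Counts query descriptors that pass Lowe's ratio test: the nearest train
// descriptor must be closer than `ratio` times the second-nearest one.
std::size_t countRatioTestMatches(const std::vector<Descriptor>& query,
                                  const std::vector<Descriptor>& train,
                                  float ratio = 0.8f) {
    std::size_t good = 0;
    if (train.size() < 2) return good;  // the test needs two neighbours
    for (const Descriptor& q : query) {
        float best = std::numeric_limits<float>::max();
        float second = std::numeric_limits<float>::max();
        for (const Descriptor& t : train) {
            float d = l2(q, t);
            if (d < best) {
                second = best;
                best = d;
            } else if (d < second) {
                second = d;
            }
        }
        if (best < ratio * second) ++good;  // distinctive match: keep it
    }
    return good;
}
```

With train descriptors at (0, 0) and (10, 0), a query at (0.5, 0) passes (0.5 < 0.8 × 9.5) while a query at (5, 0), equidistant from both, is rejected, so the count is 1 where `match()` would report 2.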