Disparity Image - Occluded vs. Mismatched Pixels

My stereo algorithm produces left and right disparity maps and identifies invalid pixels in my 'base' (left) image via a left-right consistency check. The following is an example pair (captured in low light with poor texture, so please forgive the quality).

To further improve the final disparity image, I would like to label each invalid pixel as either mismatched or occluded. For an invalid pixel to be labeled occluded, there must exist a valid surface of disparity d exactly d pixels to its right; every other invalid pixel is labeled mismatched.
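To make the rule concrete, here is a minimal sketch of how I read it, in plain Python rather than OpenCV. The `INVALID` sentinel, the label constants, and the function name `label_invalid` are assumptions made for illustration, and disparities are assumed to be integers stored as a list of rows.

```python
INVALID = -1  # assumed sentinel for pixels that failed the consistency check
VALID, MISMATCH, OCCLUDED = 0, 1, 2  # assumed label values


def label_invalid(disp):
    """Label every pixel of the left disparity map.

    disp is a list of rows of integer disparities, with INVALID where the
    left-right consistency check failed.
    """
    height, width = len(disp), len(disp[0])
    labels = [[VALID] * width for _ in range(height)]
    for y in range(height):
        row = disp[y]
        for x in range(width):
            if row[x] != INVALID:
                continue
            # Occluded if some valid pixel d columns to the right has
            # disparity exactly d; INVALID (-1) can never equal d >= 1.
            occluded = any(row[x + d] == d for d in range(1, width - x))
            labels[y][x] = OCCLUDED if occluded else MISMATCH
    return labels
```

The inner search is linear in the row width, so in practice it would probably be capped at the maximum disparity of the matcher.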

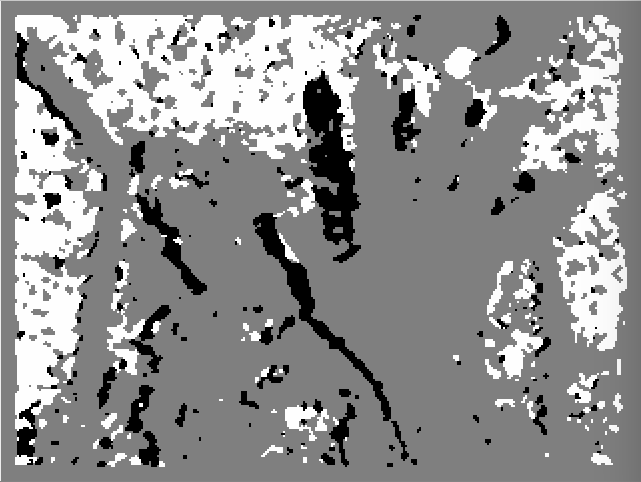

Labeling the invalid pixels looks as follows:

As you can see, this seems to be generally correct (grey = valid, white = mismatch, black = occluded). The next step is to extrapolate valid pixels into the invalid pixels. For mismatches, this can be done from all surrounding valid pixels. For occluded pixels, however, we must extrapolate from the occludee (the background surface) and not the occluder. The literature suggests relabeling mismatched pixels that neighbor occluded pixels as occluded.
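As a rough illustration of the difference between the two cases, here is a simplified row-wise fill in plain Python. It is only a sketch under my own assumptions: it looks at the nearest valid pixel to the left and right only, treats the lower disparity as the occludee, and averages for mismatches (a median over more directions would be more robust). The names `nearest_valid` and `fill_row` are hypothetical.

```python
INVALID = -1  # assumed sentinel for invalid disparities
MISMATCH, OCCLUDED = 1, 2  # assumed label values


def nearest_valid(row, x, step):
    """Return the nearest valid disparity from x in direction step, or None."""
    x += step
    while 0 <= x < len(row):
        if row[x] != INVALID:
            return row[x]
        x += step
    return None


def fill_row(row, labels_row):
    """Fill invalid pixels of one row from their nearest valid neighbours."""
    out = list(row)
    for x, d in enumerate(row):
        if d != INVALID:
            continue
        found = (nearest_valid(row, x, -1), nearest_valid(row, x, 1))
        candidates = [v for v in found if v is not None]
        if not candidates:
            continue  # no valid pixel anywhere in this row
        if labels_row[x] == OCCLUDED:
            # The occludee is the farther surface, i.e. the lower disparity.
            out[x] = min(candidates)
        else:
            # Mismatch: blend both sides.
            out[x] = sum(candidates) / len(candidates)
    return out
```

Reading from `row` and writing to `out` keeps already-filled pixels from feeding into later fills.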

For the sake of this question, assume the results were a little better: that I had a fair amount of texture in the images and that I ran something like cv::filterSpeckles on both the left and right disparity maps prior to the consistency check. In that case, what would be my path of least resistance to merging mismatched (white) blobs into adjacent (4-way or 8-way) occluded (black) blobs?

Should I do a two-pass 8-way labelling, similar to a grassfire transform?
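For reference, the behaviour I am after could also be sketched as a multi-source flood fill instead of a two-pass labelling: seed a queue with every occluded pixel and grow through connected mismatched pixels. This is plain Python for illustration only; the label constants and the function name `merge_mismatch_into_occluded` are assumptions, not anything from OpenCV.

```python
from collections import deque

VALID, MISMATCH, OCCLUDED = 0, 1, 2  # assumed label values


def merge_mismatch_into_occluded(labels, eight_way=True):
    """Relabel every mismatched blob that touches an occluded pixel."""
    height, width = len(labels), len(labels[0])
    out = [list(row) for row in labels]
    offsets = [(-1, 0), (1, 0), (0, -1), (0, 1)]
    if eight_way:
        offsets += [(-1, -1), (-1, 1), (1, -1), (1, 1)]

    # Seed the queue with all occluded pixels at once (multi-source BFS).
    queue = deque(
        (y, x) for y in range(height) for x in range(width)
        if out[y][x] == OCCLUDED
    )
    while queue:
        y, x = queue.popleft()
        for dy, dx in offsets:
            ny, nx = y + dy, x + dx
            inside = 0 <= ny < height and 0 <= nx < width
            if inside and out[ny][nx] == MISMATCH:
                out[ny][nx] = OCCLUDED  # absorb and keep growing from here
                queue.append((ny, nx))
    return out
```

Each pixel enters the queue at most once, so the cost is linear in the image size, and mismatched blobs that never touch an occluded pixel keep their label.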