
| 1 | initial version |

Here is the code I use to pre-process a face. The pipeline has four steps:

    // Step 1: detect landmarks over the detected face
    vector<cv::Point2d> landmarks = landmark_detector->detectLandmarks(img_gray,Rect(r.x,r.y,r.width,r.height));

    // Step 2: align face
    Mat aligned_image;
    vector<cv::Point2d> aligned_landmarks;
    aligner->align(img_gray,aligned_image,landmarks,aligned_landmarks);

    // Step 3: normalize region
    Mat normalized_region = normalizer->process(aligned_image,Rect(r.x,r.y,r.width,r.height),aligned_landmarks);

    // Step 4: Tan & Triggs illumination normalization
    normalized_region = ((FlandMarkFaceAlign *)normalizer)->tan_triggs_preprocessing(normalized_region, gamma_correct,dog,contrast_eq);

Step 3 crops only the face region, and step 4 applies Tan & Triggs illumination normalization.

Below is the code for steps 1 and 2.

STEP 1

Step 1 calls a landmark detector. The flandmark detector is a good one (better than one based on cascades, for example). My detector returns the landmark positions by calling flandmark, but it also does an additional task:

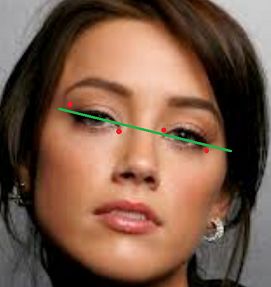

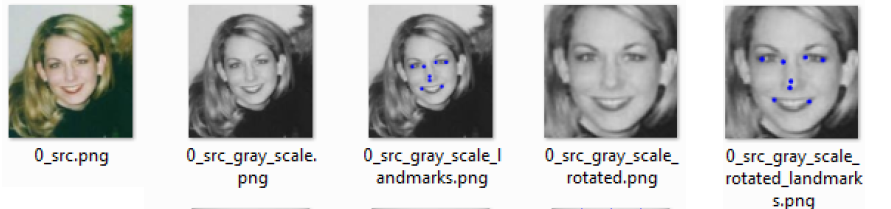

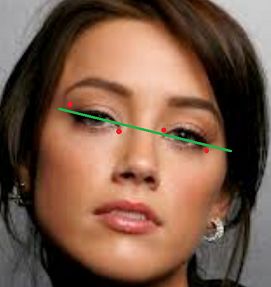

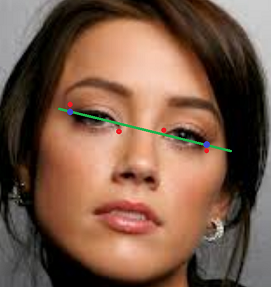

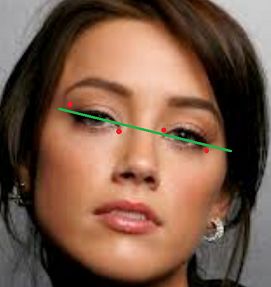

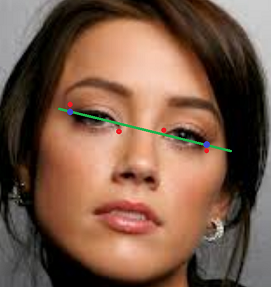

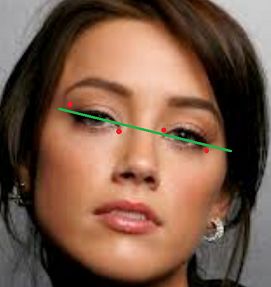

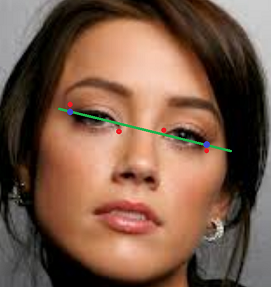

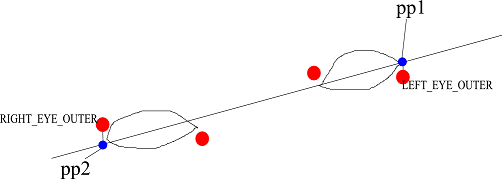

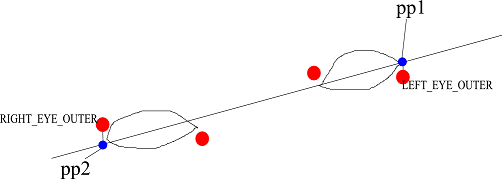

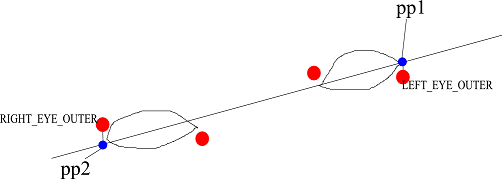

I fit a line by linear regression through the four eye-corner points (LEFT_EYE_OUTER, LEFT_EYE_INNER, RIGHT_EYE_INNER, RIGHT_EYE_OUTER). Then I compute two points on this regression line (the blue ones). These points are called pp1 and pp2 in the code. I used this linear regression class.

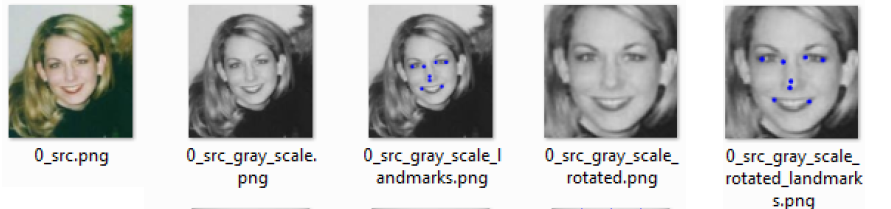

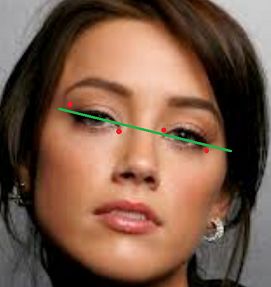

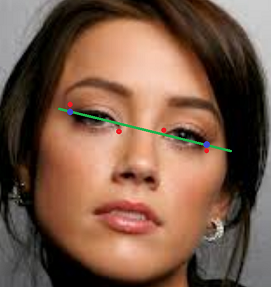

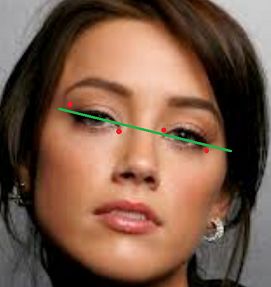

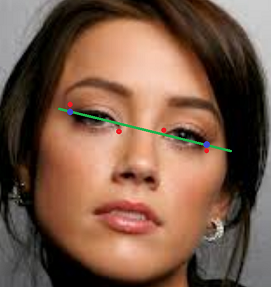

So, with the first step I get the following results:

The code is as follows:

    vector<cv::Point2d> FlandmarkLandmarkDetection::detectLandmarks(const Mat & image, const Rect & face){
        vector<Point2d> landmarks;
        // Bounding box as {x_min, y_min, x_max, y_max}.
        int bbox[4] = { face.x, face.y, face.x + face.width, face.y + face.height };
        const int M = this->model->data.options.M;
        // A vector releases its memory on every return path (the raw new[] leaked on the early return).
        vector<double> points(2 * M);
        // Stack-allocated IplImage header over the Mat data (the heap-allocated header was never freed).
        IplImage ipl_image(image);
        // A positive return value signals failure: return an empty vector.
        if(flandmark_detect(&ipl_image, bbox, this->model, &points[0]) > 0){
            return landmarks;
        }
        for (int i = 0; i < M; i++) {
            landmarks.push_back(Point2d(points[2 * i], points[2 * i + 1]));
        }

        // Fit a line y = a + b*x through the four eye corners.
        LinearRegression lr;
        lr.addPoint(Point2D(landmarks[LEFT_EYE_OUTER].x,landmarks[LEFT_EYE_OUTER].y));
        lr.addPoint(Point2D(landmarks[LEFT_EYE_INNER].x,landmarks[LEFT_EYE_INNER].y));
        lr.addPoint(Point2D(landmarks[RIGHT_EYE_INNER].x,landmarks[RIGHT_EYE_INNER].y));
        lr.addPoint(Point2D(landmarks[RIGHT_EYE_OUTER].x,landmarks[RIGHT_EYE_OUTER].y));

        // Fit-quality diagnostics (not used below).
        double coef_determination = lr.getCoefDeterm();
        double coef_correlation = lr.getCoefCorrel();
        double standar_error_estimate = lr.getStdErrorEst();

        double a = lr.getA();
        double b = lr.getB();

        // Points on the fitted line at the x of each outer eye corner (Point2d keeps sub-pixel precision).
        cv::Point2d pp1(landmarks[LEFT_EYE_OUTER].x, landmarks[LEFT_EYE_OUTER].x*b+a);
        cv::Point2d pp2(landmarks[RIGHT_EYE_OUTER].x, landmarks[RIGHT_EYE_OUTER].x*b+a);

        landmarks.push_back(pp1); //landmarks[LEFT_EYE_ALIGN]
        landmarks.push_back(pp2); //landmarks[RIGHT_EYE_ALIGN]
        return landmarks;
    }

STEP 2

Step 2 aligns the face. It is also based on https://github.com/MasteringOpenCV/code/blob/master/Chapter8_FaceRecognition/preprocessFace.cpp

The code is as follows:

    double FlandMarkFaceAlign::align(const Mat &image, Mat &dst_image, vector<Point2d> &landmarks, vector<Point2d> &dst_landmarks){
        // Desired eye position as a fraction of the output size.
        // These values control how much of the face is visible after preprocessing.
        const double DESIRED_LEFT_EYE_X = 0.27;
        const double DESIRED_LEFT_EYE_Y = 0.4;
        const double DESIRED_RIGHT_EYE_X = (1.0 - DESIRED_LEFT_EYE_X);
        const int desiredFaceWidth = 144;
        const int desiredFaceHeight = desiredFaceWidth;

        // detectLandmarks() returns an empty vector on failure, so check before indexing.
        CV_Assert(landmarks.size() > (size_t)RIGHT_EYE_ALIGN);
        Point2d leftEye = landmarks[LEFT_EYE_ALIGN];
        Point2d rightEye = landmarks[RIGHT_EYE_ALIGN];

        // Get the center between the 2 eyes.
        Point2f eyesCenter = Point2f( (leftEye.x + rightEye.x) * 0.5f, (leftEye.y + rightEye.y) * 0.5f );

        // Get the angle between the 2 eyes.
        double dy = (rightEye.y - leftEye.y);
        double dx = (rightEye.x - leftEye.x);
        double len = sqrt(dx*dx + dy*dy);
        double angle = atan2(dy, dx) * 180.0/CV_PI; // Convert from radians to degrees.

        // Get the amount we need to scale the image to be the desired fixed size we want.
        double desiredLen = (DESIRED_RIGHT_EYE_X - DESIRED_LEFT_EYE_X) * desiredFaceWidth;
        double scale = desiredLen / len;

        // Get the transformation matrix for rotating and scaling the face to the desired angle & size.
        Mat rot_mat = getRotationMatrix2D(eyesCenter, angle, scale);

        // Shift the center of the eyes to be the desired center between the eyes.
        rot_mat.at<double>(0, 2) += desiredFaceWidth * 0.5f - eyesCenter.x;
        rot_mat.at<double>(1, 2) += desiredFaceHeight * DESIRED_LEFT_EYE_Y - eyesCenter.y;

        // Rotate, scale and translate the image to the desired angle, size and position.
        dst_image = Mat(desiredFaceHeight, desiredFaceWidth, CV_8U, Scalar(128));
        // Pass the grey border value explicitly: with the default border value, uncovered pixels come out black.
        warpAffine(image, dst_image, rot_mat, dst_image.size(), INTER_LINEAR, BORDER_CONSTANT, Scalar(128));

        // Don't forget to transform the landmarks as well.
        get_rotated_points(landmarks,dst_landmarks, rot_mat);

        if(!dst_image.empty()){
            // Show landmarks
            show_landmarks(dst_landmarks,dst_image,"rotate landmarks");
        }
        return angle;
    }

    void FlandMarkFaceAlign::get_rotated_points(const std::vector<cv::Point2d> &points, std::vector<cv::Point2d> &dst_points, const cv::Mat &rot_mat){
        dst_points.clear(); // do not append to stale results
        for(size_t i=0; i<points.size(); i++){
            // Homogeneous coordinates (x, y, 1), so the 2x3 matrix also applies the translation.
            Mat point_original(3,1,CV_64FC1);
            point_original.at<double>(0,0) = points[i].x;
            point_original.at<double>(1,0) = points[i].y;
            point_original.at<double>(2,0) = 1;

            Mat result(2,1,CV_64FC1);
            gemm(rot_mat,point_original, 1.0, cv::Mat(), 0.0, result);

            Point point_result(cvRound(result.at<double>(0,0)),cvRound(result.at<double>(1,0)));
            dst_points.push_back(point_result);
        }
    }

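    void FlandMarkFaceAlign::show_landmarks(const std::vector<cv::Point2d> &landmarks, const cv::Mat& image, const string &named_window){
        // Nothing to draw if the two alignment points are missing.
        if(landmarks.size() <= (size_t)RIGHT_EYE_ALIGN) return;

        Mat copy_image;
        image.copyTo(copy_image);

        // Detected landmarks (everything except the last two entries).
        for(size_t i=0; i+2<landmarks.size(); i++){
            circle(copy_image,landmarks[i], 1,Scalar(255,0,0),2);
        }
        // Alignment points pp1 and pp2.
        circle(copy_image,landmarks[LEFT_EYE_ALIGN], 1,Scalar(255,255,0),2);
        circle(copy_image,landmarks[RIGHT_EYE_ALIGN], 1,Scalar(255,255,0),2);

        // The posted code never used named_window; displaying the copy here is an assumed intent.
        imshow(named_window, copy_image);
    }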

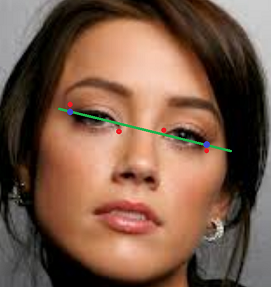

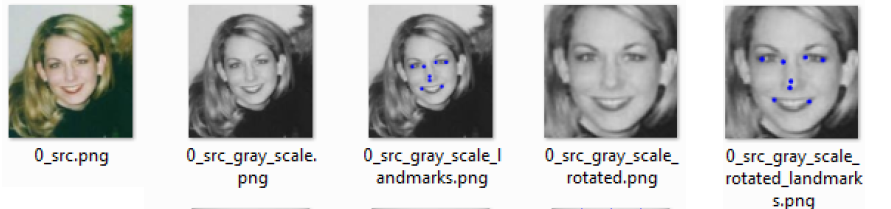

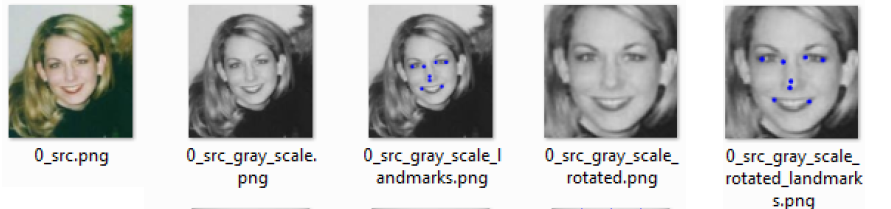

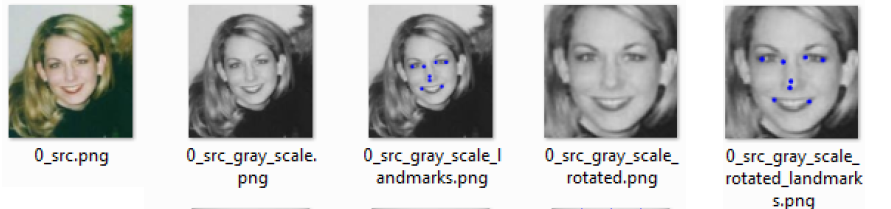

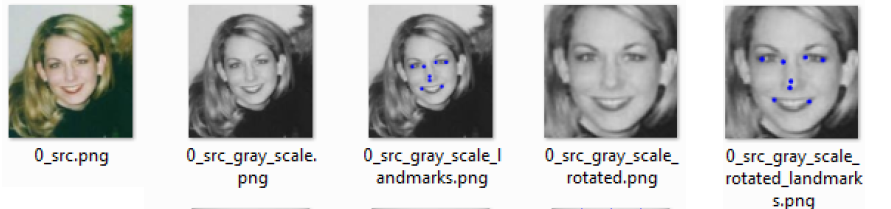

The final result is something like this:

| 5 | No.5 Revision |

Here is the code I use to pre-process a face. The pipeline has four steps:

    // Step 1: detect landmarks over the detected face
    vector<cv::Point2d> landmarks = landmark_detector->detectLandmarks(img_gray,Rect(r.x,r.y,r.width,r.height));

    // Step 2: align face
    Mat aligned_image;
    vector<cv::Point2d> aligned_landmarks;
    aligner->align(img_gray,aligned_image,landmarks,aligned_landmarks);

    // Step 3: normalize region
    Mat normalized_region = normalizer->process(aligned_image,Rect(r.x,r.y,r.width,r.height),aligned_landmarks);

    // Step 4: Tan & Triggs illumination normalization
    normalized_region = ((FlandMarkFaceAlign *)normalizer)->tan_triggs_preprocessing(normalized_region, gamma_correct,dog,contrast_eq);

Step 3 crops only the face region, and step 4 applies Tan & Triggs illumination normalization.

Below is the code for steps 1 and 2.

STEP 1

Step 1 calls a landmark detector. The flandmark detector is a good one (better than one based on cascades, for example). My detector returns the landmark positions by calling flandmark, but it also does an additional task:

I fit a line by linear regression through the four eye-corner points (LEFT_EYE_OUTER, LEFT_EYE_INNER, RIGHT_EYE_INNER, RIGHT_EYE_OUTER). Then I compute two points on this regression line (the blue ones). These points are called pp1 and pp2 in the code. I used this linear regression class.

I use these two points to rotate the face. They are more robust than using, for example, only LEFT_EYE_OUTER/RIGHT_EYE_OUTER or only LEFT_EYE_INNER/RIGHT_EYE_INNER, because all four eye corners contribute to the fitted line.

So, with the first step I get the following results:

The code is as follows:

    vector<cv::Point2d> FlandmarkLandmarkDetection::detectLandmarks(const Mat & image, const Rect & face){
        vector<Point2d> landmarks;
        // Bounding box as {x_min, y_min, x_max, y_max}.
        int bbox[4] = { face.x, face.y, face.x + face.width, face.y + face.height };
        const int M = this->model->data.options.M;
        // A vector releases its memory on every return path (the raw new[] leaked on the early return).
        vector<double> points(2 * M);
        // Stack-allocated IplImage header over the Mat data (the heap-allocated header was never freed).
        IplImage ipl_image(image);
        //http://cmp.felk.cvut.cz/~uricamic/flandmark/
        // A positive return value signals failure: return an empty vector.
        if(flandmark_detect(&ipl_image, bbox, this->model, &points[0]) > 0){
            return landmarks;
        }
        for (int i = 0; i < M; i++) {
            landmarks.push_back(Point2d(points[2 * i], points[2 * i + 1]));
        }

        // Fit a line y = a + b*x through the four eye corners.
        LinearRegression lr;
        lr.addPoint(Point2D(landmarks[LEFT_EYE_OUTER].x,landmarks[LEFT_EYE_OUTER].y));
        lr.addPoint(Point2D(landmarks[LEFT_EYE_INNER].x,landmarks[LEFT_EYE_INNER].y));
        lr.addPoint(Point2D(landmarks[RIGHT_EYE_INNER].x,landmarks[RIGHT_EYE_INNER].y));
        lr.addPoint(Point2D(landmarks[RIGHT_EYE_OUTER].x,landmarks[RIGHT_EYE_OUTER].y));

        // Fit-quality diagnostics (not used below).
        double coef_determination = lr.getCoefDeterm();
        double coef_correlation = lr.getCoefCorrel();
        double standar_error_estimate = lr.getStdErrorEst();

        double a = lr.getA();
        double b = lr.getB();

        // Points on the fitted line at the x of each outer eye corner (Point2d keeps sub-pixel precision).
        cv::Point2d pp1(landmarks[LEFT_EYE_OUTER].x, landmarks[LEFT_EYE_OUTER].x*b+a);
        cv::Point2d pp2(landmarks[RIGHT_EYE_OUTER].x, landmarks[RIGHT_EYE_OUTER].x*b+a);

        landmarks.push_back(pp1); //landmarks[LEFT_EYE_ALIGN]
        landmarks.push_back(pp2); //landmarks[RIGHT_EYE_ALIGN]
        return landmarks;
    }

STEP 2

Step 2 aligns the face. It is also based on https://github.com/MasteringOpenCV/code/blob/master/Chapter8_FaceRecognition/preprocessFace.cpp

The code is as follows:

    double FlandMarkFaceAlign::align(const Mat &image, Mat &dst_image, vector<Point2d> &landmarks, vector<Point2d> &dst_landmarks){
        // Desired eye position as a fraction of the output size.
        // These values control how much of the face is visible after preprocessing.
        const double DESIRED_LEFT_EYE_X = 0.27;
        const double DESIRED_LEFT_EYE_Y = 0.4;
        const double DESIRED_RIGHT_EYE_X = (1.0 - DESIRED_LEFT_EYE_X);
        const int desiredFaceWidth = 144;
        const int desiredFaceHeight = desiredFaceWidth;

        // detectLandmarks() returns an empty vector on failure, so check before indexing.
        CV_Assert(landmarks.size() > (size_t)RIGHT_EYE_ALIGN);
        Point2d leftEye = landmarks[LEFT_EYE_ALIGN];
        Point2d rightEye = landmarks[RIGHT_EYE_ALIGN];

        // Get the center between the 2 eyes.
        Point2f eyesCenter = Point2f( (leftEye.x + rightEye.x) * 0.5f, (leftEye.y + rightEye.y) * 0.5f );

        // Get the angle between the 2 eyes.
        double dy = (rightEye.y - leftEye.y);
        double dx = (rightEye.x - leftEye.x);
        double len = sqrt(dx*dx + dy*dy);
        double angle = atan2(dy, dx) * 180.0/CV_PI; // Convert from radians to degrees.

        // Get the amount we need to scale the image to be the desired fixed size we want.
        double desiredLen = (DESIRED_RIGHT_EYE_X - DESIRED_LEFT_EYE_X) * desiredFaceWidth;
        double scale = desiredLen / len;

        // Get the transformation matrix for rotating and scaling the face to the desired angle & size.
        Mat rot_mat = getRotationMatrix2D(eyesCenter, angle, scale);

        // Shift the center of the eyes to be the desired center between the eyes.
        rot_mat.at<double>(0, 2) += desiredFaceWidth * 0.5f - eyesCenter.x;
        rot_mat.at<double>(1, 2) += desiredFaceHeight * DESIRED_LEFT_EYE_Y - eyesCenter.y;

        // Rotate, scale and translate the image to the desired angle, size and position.
        dst_image = Mat(desiredFaceHeight, desiredFaceWidth, CV_8U, Scalar(128));
        // Pass the grey border value explicitly: with the default border value, uncovered pixels come out black.
        warpAffine(image, dst_image, rot_mat, dst_image.size(), INTER_LINEAR, BORDER_CONSTANT, Scalar(128));

        // Don't forget to transform the landmarks as well.
        get_rotated_points(landmarks,dst_landmarks, rot_mat);

        if(!dst_image.empty()){
            // Show landmarks
            show_landmarks(dst_landmarks,dst_image,"rotate landmarks");
        }
        return angle;
    }

    void FlandMarkFaceAlign::get_rotated_points(const std::vector<cv::Point2d> &points, std::vector<cv::Point2d> &dst_points, const cv::Mat &rot_mat){
        dst_points.clear(); // do not append to stale results
        for(size_t i=0; i<points.size(); i++){
            // Homogeneous coordinates (x, y, 1), so the 2x3 matrix also applies the translation.
            Mat point_original(3,1,CV_64FC1);
            point_original.at<double>(0,0) = points[i].x;
            point_original.at<double>(1,0) = points[i].y;
            point_original.at<double>(2,0) = 1;

            Mat result(2,1,CV_64FC1);
            gemm(rot_mat,point_original, 1.0, cv::Mat(), 0.0, result);

            Point point_result(cvRound(result.at<double>(0,0)),cvRound(result.at<double>(1,0)));
            dst_points.push_back(point_result);
        }
    }

void FlandMarkFaceAlign::show_landmarks(const std::vector<cv::Point2d> &landmarks, const cv::Mat& image, const string &named_window){

Mat copy_image;

image.copyTo(copy_image);

for(size_t i=0; i+2<landmarks.size(); i++){ // skip the last two entries (pp1, pp2); written this way so the unsigned size cannot underflow

circle(copy_image,landmarks[i], 1,Scalar(255,0,0),2);

}

circle(copy_image,landmarks[LEFT_EYE_ALIGN], 1,Scalar(255,255,0),2);

circle(copy_image,landmarks[RIGHT_EYE_ALIGN], 1,Scalar(255,255,0),2);

imshow(named_window, copy_image); // display the annotated copy; named_window was otherwise unused

}

The final result is something like this:

I modified the original values of DESIRED_LEFT_EYE_X and DESIRED_LEFT_EYE_Y from here because I want to keep the whole face. After this step I run a "region normalizer" algorithm for face normalization, which is based on another paper, "An experimental comparison of gender classification methods", and is described in its Table 1.

Some code was missing: I forgot to include the enum with the landmark indices:

enum landmark_pos {

FACE_CENTER = 0,

LEFT_EYE_INNER = 1,

RIGHT_EYE_INNER = 2,

MOUTH_LEFT = 3,

MOUTH_RIGHT = 4,

LEFT_EYE_OUTER = 5,

RIGHT_EYE_OUTER = 6,

NOSE_CENTER = 7,

LEFT_EYE_ALIGN = 8, //pp1

RIGHT_EYE_ALIGN = 9 //pp2

};

| 6 | No.6 Revision |

Below is the code I use to pre-process a face. The pipeline is as follows:

//Step 1: detect landmarks over the detected face

vector<cv::Point2d> landmarks = landmark_detector->detectLandmarks(img_gray,Rect(r.x,r.y,r.width,r.height));

//Step 2: align face

Mat aligned_image;

vector<cv::Point2d> aligned_landmarks;

aligner->align(img_gray,aligned_image,landmarks,aligned_landmarks);

//Step 3: normalize region

Mat normalized_region = normalizer->process(aligned_image,Rect(r.x,r.y,r.width,r.height),aligned_landmarks);

//Step 4: tan&&triggs

normalized_region = ((FlandMarkFaceAlign *)normalizer)->tan_triggs_preprocessing(normalized_region, gamma_correct,dog,contrast_eq);

Step 3 extracts only the "face region", and step 4 applies Tan & Triggs preprocessing.

Below is the code for steps 1 and 2.

STEP 1

Step 1 calls a landmark detector. The flandmark detector is a good choice (better, for example, than a detector based on cascades). My detector class returns the landmark positions found by flandmark, and it also performs one additional task:

I fit a line by linear regression to the four eye points (LEFT_EYE_OUTER, LEFT_EYE_INNER, RIGHT_EYE_INNER, RIGHT_EYE_OUTER). Then I compute two points on this regression line (the blue ones), which are called pp1 and pp2 in the code. I used this linear regression class.

I use these two points to rotate the face. They are more robust than using, for example, LEFT_EYE_OUTER/RIGHT_EYE_OUTER or LEFT_EYE_INNER/RIGHT_EYE_INNER.

So, with the first step I get the following results:

The code is as follows:

vector<cv::Point2d> FlandmarkLandmarkDetection::detectLandmarks(const Mat & image, const Rect & face){

vector<Point2d> landmarks;

int bbox[4] = { face.x, face.y, face.x + face.width, face.y + face.height };

double *points = new double[2 * this->model->data.options.M];

//http://cmp.felk.cvut.cz/~uricamic/flandmark/

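IplImage ipl_image = image; // header only, no pixel copy (OpenCV 2.x conversion); avoids leaking a heap-allocated IplImage

if(flandmark_detect(&ipl_image, bbox, this->model,points) > 0){

delete[] points; // release the buffer on the error path as well

return landmarks;

}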

for (int i = 0; i < this->model->data.options.M; i++) {

landmarks.push_back(Point2d(points[2 * i], points[2 * i + 1])); // Point2d matches the vector's element type and avoids narrowing to float

}

LinearRegression lr;

lr.addPoint(Point2D(landmarks[LEFT_EYE_OUTER].x,landmarks[LEFT_EYE_OUTER].y));

lr.addPoint(Point2D(landmarks[LEFT_EYE_INNER].x,landmarks[LEFT_EYE_INNER].y));

lr.addPoint(Point2D(landmarks[RIGHT_EYE_INNER].x,landmarks[RIGHT_EYE_INNER].y));

lr.addPoint(Point2D(landmarks[RIGHT_EYE_OUTER].x,landmarks[RIGHT_EYE_OUTER].y));

double coef_determination = lr.getCoefDeterm();

double coef_correlation = lr.getCoefCorrel();

double standar_error_estimate = lr.getStdErrorEst();

double a = lr.getA();

double b = lr.getB();

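cv::Point2d pp1(landmarks[LEFT_EYE_OUTER].x, landmarks[LEFT_EYE_OUTER].x*b+a); // point on the regression line at the left outer eye's x; Point2d avoids integer truncation

cv::Point2d pp2(landmarks[RIGHT_EYE_OUTER].x, landmarks[RIGHT_EYE_OUTER].x*b+a); // point on the regression line at the right outer eye's x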

landmarks.push_back(pp1); //landmarks[LEFT_EYE_ALIGN]

landmarks.push_back(pp2); //landmarks[RIGHT_EYE_ALIGN]

delete[] points;

points = 0;

return landmarks;

}
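The regression step above relies on an external LinearRegression class whose code is not shown here. As a rough illustration only, the same idea can be sketched with an ordinary least-squares fit in plain C++. The names `Pt`, `Line`, `fitLine` and `onLine` are hypothetical and not part of the original code; `a` and `b` play the roles of `getA()` (intercept) and `getB()` (slope) as they are used in `x*b+a`.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical minimal point type; the post itself uses cv::Point2d.
struct Pt { double x; double y; };

// Hypothetical line type for y = a + b * x (a: intercept, b: slope).
struct Line { double a; double b; };

// Ordinary least-squares fit of y on x.
// Assumes at least two points with distinct x values (true for the four eye corners
// of a roughly frontal face, but not checked here).
Line fitLine(const std::vector<Pt>& pts) {
    double sx = 0.0, sy = 0.0, sxx = 0.0, sxy = 0.0;
    for (const Pt& p : pts) {
        sx  += p.x;
        sy  += p.y;
        sxx += p.x * p.x;
        sxy += p.x * p.y;
    }
    const double n = static_cast<double>(pts.size());
    const double b = (n * sxy - sx * sy) / (n * sxx - sx * sx);
    const double a = (sy - b * sx) / n;
    return Line{a, b};
}

// Point on the fitted line at a given x; this is how pp1 and pp2 are derived.
Pt onLine(const Line& line, double x) {
    return Pt{x, line.a + line.b * x};
}
```

Calling `fitLine` with the four eye corners and then `onLine` with the x coordinates of LEFT_EYE_OUTER and RIGHT_EYE_OUTER would give the two alignment points. Because all four corners contribute to the fit, a single badly placed landmark shifts the line less than it would shift a line through only two corners.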

STEP 2

Step 2 aligns the face. It is also based on https://github.com/MasteringOpenCV/code/blob/master/Chapter8_FaceRecognition/preprocessFace.cpp

The code is as follows:

double FlandMarkFaceAlign::align(const Mat &image, Mat &dst_image, vector<Point2d> &landmarks, vector<Point2d> &dst_landmarks){

const double DESIRED_LEFT_EYE_X = 0.27; // Controls how much of the face is visible after preprocessing.

const double DESIRED_LEFT_EYE_Y = 0.4;

int desiredFaceWidth = 144;

int desiredFaceHeight = desiredFaceWidth;

Point2d leftEye = landmarks[LEFT_EYE_ALIGN];

Point2d rightEye = landmarks[RIGHT_EYE_ALIGN];

// Get the center between the 2 eyes.

Point2f eyesCenter = Point2f( (leftEye.x + rightEye.x) * 0.5f, (leftEye.y + rightEye.y) * 0.5f );

// Get the angle between the 2 eyes.

double dy = (rightEye.y - leftEye.y);

double dx = (rightEye.x - leftEye.x);

double len = sqrt(dx*dx + dy*dy);

double angle = atan2(dy, dx) * 180.0/CV_PI; // Convert from radians to degrees.

// Hand measurements have shown that the left eye center should ideally be at roughly (0.19, 0.14) of a scaled face image; the values used above differ so that more of the face is kept.

const double DESIRED_RIGHT_EYE_X = (1.0f - DESIRED_LEFT_EYE_X);

// Get the amount we need to scale the image to be the desired fixed size we want.

double desiredLen = (DESIRED_RIGHT_EYE_X - DESIRED_LEFT_EYE_X) * desiredFaceWidth;

double scale = desiredLen / len;

// Get the transformation matrix for rotating and scaling the face to the desired angle & size.

Mat rot_mat = getRotationMatrix2D(eyesCenter, angle, scale);

// Shift the center of the eyes to be the desired center between the eyes.

rot_mat.at<double>(0, 2) += desiredFaceWidth * 0.5f - eyesCenter.x;

rot_mat.at<double>(1, 2) += desiredFaceHeight * DESIRED_LEFT_EYE_Y - eyesCenter.y;

// Rotate and scale and translate the image to the desired angle & size & position!

// Note that the output is square: desiredFaceHeight is set equal to desiredFaceWidth (1:1 aspect ratio).

dst_image = Mat(desiredFaceHeight, desiredFaceWidth, CV_8U, Scalar(128)); // Clear the output image to a default grey.

warpAffine(image, dst_image, rot_mat, dst_image.size());

//don't forget to rotate landmarks also!!!

get_rotated_points(landmarks,dst_landmarks, rot_mat);

if(!dst_image.empty()){

//show landmarks

show_landmarks(dst_landmarks,dst_image,"rotate landmarks");

}

return angle;

}
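To make the geometry of the alignment easier to check, here is a small sketch in plain C++ (no OpenCV) that builds the same kind of 2x3 matrix. It assumes the documented formula of getRotationMatrix2D (alpha = scale*cos(angle), beta = scale*sin(angle)) and then adds the same translation as the code above. `Affine`, `alignmentMatrix` and `applyAffine` are hypothetical names introduced only for this illustration.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Row-major 2x3 affine matrix: [m0 m1 m2; m3 m4 m5]. Hypothetical helper type.
using Affine = std::array<double, 6>;

// Build the rotate + scale + translate matrix from the two alignment points.
// eyeX / eyeY correspond to DESIRED_LEFT_EYE_X / DESIRED_LEFT_EYE_Y in the post.
Affine alignmentMatrix(double lx, double ly, double rx, double ry,
                       double eyeX, double eyeY, int width, int height) {
    const double dx = rx - lx;
    const double dy = ry - ly;
    const double len = std::sqrt(dx * dx + dy * dy);
    const double angle = std::atan2(dy, dx);               // radians
    const double scale = (1.0 - 2.0 * eyeX) * width / len; // desiredLen / len
    const double cx = (lx + rx) * 0.5;                     // eyes center
    const double cy = (ly + ry) * 0.5;

    // Same layout as the matrix documented for getRotationMatrix2D.
    const double alpha = scale * std::cos(angle);
    const double beta  = scale * std::sin(angle);
    Affine m = {{ alpha, beta, (1.0 - alpha) * cx - beta * cy,
                  -beta, alpha, beta * cx + (1.0 - alpha) * cy }};

    // Shift the eyes center to its desired position in the output image.
    m[2] += width * 0.5 - cx;
    m[5] += height * eyeY - cy;
    return m;
}

// Apply the affine matrix to one point.
void applyAffine(const Affine& m, double x, double y, double& outX, double& outY) {
    outX = m[0] * x + m[1] * y + m[2];
    outY = m[3] * x + m[4] * y + m[5];
}
```

With eyeX = 0.27, eyeY = 0.4 and a 144x144 output, the two alignment points should land on the same row, at x = 0.27*144 and x = 0.73*144, which is a quick sanity check for the matrix.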

void FlandMarkFaceAlign::get_rotated_points(const std::vector<cv::Point2d> &points, std::vector<cv::Point2d> &dst_points, const cv::Mat &rot_mat){

for(size_t i=0; i<points.size(); i++){ // size_t avoids a signed/unsigned comparison

Mat point_original(3,1,CV_64FC1);

point_original.at<double>(0,0) = points[i].x;

point_original.at<double>(1,0) = points[i].y;

point_original.at<double>(2,0) = 1;

Mat result(2,1,CV_64FC1);

gemm(rot_mat,point_original, 1.0, cv::Mat(), 0.0, result);

Point2d point_result(result.at<double>(0,0), result.at<double>(1,0)); // keep sub-pixel precision; dst_points stores Point2d

dst_points.push_back(point_result);

}

}
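Transforming the landmarks does not strictly need a matrix product per point. The following is a minimal sketch, in plain C++ and under my own assumptions, of applying a 2x3 affine matrix to a list of points directly. `Pt2`, `Affine2x3` and `transformPoints` are hypothetical names; the sketch keeps double precision and clears the destination vector first, so calling it twice does not accumulate points.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical minimal point type; the post itself uses cv::Point2d.
struct Pt2 { double x; double y; };

// Row-major 2x3 affine matrix: [m0 m1 m2; m3 m4 m5]. Hypothetical helper type.
using Affine2x3 = std::array<double, 6>;

// Apply the affine transform to every point in src and store the results in dst.
void transformPoints(const std::vector<Pt2>& src, std::vector<Pt2>& dst,
                     const Affine2x3& m) {
    dst.clear();               // start from an empty destination
    dst.reserve(src.size());
    for (const Pt2& p : src) {
        dst.push_back(Pt2{ m[0] * p.x + m[1] * p.y + m[2],
                           m[3] * p.x + m[4] * p.y + m[5] });
    }
}
```

Whether sub-pixel precision matters depends on what the later steps do with the landmarks; for drawing circles, integer coordinates are enough.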

void FlandMarkFaceAlign::show_landmarks(const std::vector<cv::Point2d> &landmarks, const cv::Mat& image, const string &named_window){

Mat copy_image;

image.copyTo(copy_image);

for(size_t i=0; i+2<landmarks.size(); i++){ // skip the last two entries (pp1, pp2); written this way so the unsigned size cannot underflow

circle(copy_image,landmarks[i], 1,Scalar(255,0,0),2);

}

circle(copy_image,landmarks[LEFT_EYE_ALIGN], 1,Scalar(255,255,0),2);

circle(copy_image,landmarks[RIGHT_EYE_ALIGN], 1,Scalar(255,255,0),2);

imshow(named_window, copy_image); // display the annotated copy; named_window was otherwise unused

}

The final result is something like this:

I modified the original values of DESIRED_LEFT_EYE_X and DESIRED_LEFT_EYE_Y from here because I want to keep the whole face. After this step I run a "region normalizer" algorithm for face normalization, which is based on another paper, "An experimental comparison of gender classification methods", and is described in its Table 1.

Some code was missing: I forgot to include the enum with the landmark indices:

enum landmark_pos {

FACE_CENTER = 0,

LEFT_EYE_INNER = 1,

RIGHT_EYE_INNER = 2,

MOUTH_LEFT = 3,

MOUTH_RIGHT = 4,

LEFT_EYE_OUTER = 5,

RIGHT_EYE_OUTER = 6,

NOSE_CENTER = 7,

LEFT_EYE_ALIGN = 8, //pp1

RIGHT_EYE_ALIGN = 9 //pp2

};

Source code can be downloaded from: http://pastebin.com/M0ZwHZ2p